NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

National Research Council (US) Committee on Capitalizing on Science, Technology, and Innovation: An Assessment of the Small Business Innovation Research Program. An Assessment of the Small Business Innovation Research Program: Project Methodology. Washington (DC): National Academies Press (US); 2004.

An Assessment of the Small Business Innovation Research Program: Project Methodology.

1. Introduction

As the Small Business Innovation Research (SBIR) program approached its twentieth year of operation, the U.S. Congress requested that the National Research Council (NRC) conduct a “comprehensive study of how the SBIR program has stimulated technological innovation and used small businesses to meet federal research and development needs,” and to make recommendations on improvements to the program.1

Mandated as a part of SBIR's renewal in 2000, the NRC study is to assess the SBIR program as administered at the five federal agencies that together make up 96 percent of SBIR program expenditures. The agencies, in order of program size, are DoD, NIH, NASA, DoE, and NSF.

The objective of the study is not to consider whether SBIR should exist; Congress has already decided affirmatively on this question. Rather, the NRC Committee conducting this study is charged with providing assessment-based findings to improve public understanding of the program as well as recommendations to improve the program's effectiveness.

In addition to setting out the study objectives, this report defines key concepts, identifies potential metrics and data sources, and describes the range of methodological approaches being developed by the NRC to assess the SBIR program. Following some historical background on the SBIR program, this introduction outlines the basic parameters of this NRC study.

A Brief History of the SBIR Program

In the 1980s, the country's slow pace in commercializing new technologies, compared especially with the global manufacturing and marketing success of Japanese firms in autos, steel, and semiconductors, led to serious concern in the United States about the nation's ability to compete. U.S. industrial competitiveness in the 1980s was frequently cast in terms of American industry's failure “to translate its research prowess into commercial advantage.”2 The pessimism of some was reinforced by evidence of slowing growth at corporate research laboratories that had been leaders of American innovation in the postwar period and the apparent success of the cooperative model exemplified by some Japanese keiretsu.3

Yet, even as larger firms were downsizing to improve their competitive posture, a growing body of evidence, starting in the late 1970s and accelerating in the 1980s, began to indicate that small businesses were assuming an increasingly important role in both innovation and job creation.4 Research by David Birch and others suggested that national policies should promote and build on the competitive strength offered by small businesses.5

In addition to considerations of economic growth and competitiveness, SBIR was also motivated by concerns that small businesses were being disadvantaged vis-à-vis larger firms in competition for R&D contracts. Federal commissions from as early as the 1960s had recommended directing R&D funds toward small businesses.6 These recommendations, however, were opposed by competing recipients of R&D funding. Although small businesses were beginning to be recognized by the late 1970s as a potentially fruitful source of innovation, some in government remained wary of funding small firms focused on high-risk technologies with commercial promise.

The concept of early-stage financial support for high-risk technologies with commercial promise was first advanced by Roland Tibbetts at the National Science Foundation (NSF). As early as 1976, Mr. Tibbetts advocated that NSF increase the share of its funds going to small business. When NSF adopted this initiative, small firms were enthused and proceeded to lobby other agencies to follow NSF's lead. When there was no immediate response to these efforts, small businesses took their case to Congress and higher levels of the Executive branch.7 In response, a White House Conference on Small Business was held in January 1980 under the Carter Administration. The conference's recommendation to proceed with a program for small business innovation research was grounded in:

- Evidence that a declining share of federal R&D was going to small businesses;

- Broader difficulties among small businesses in raising capital in a period of historically high interest rates; and

- Research suggesting that small businesses were fertile sources of job creation.

Congress responded under the Reagan Administration with the passage of the Small Business Innovation Development Act of 1982, which established the SBIR program.8

The SBIR Development Act of 1982

The new SBIR program initially required agencies with R&D budgets in excess of $100 million to set aside 0.2 percent of their funds for SBIR. This amount totaled $45 million in 1983, the program's first year of operation. Over the next 6 years, the set-aside grew to 1.25 percent.9
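The set-aside rule described above reduces to a threshold test followed by a percentage. The Python sketch below is illustrative only: the function names are hypothetical, it applies the rate to the agency's R&D budget exactly as the text phrases it (the statute's precise funding base is not modeled), and it assumes a single uniform rate when backing out the funds implied by the 1983 total.

```python
# Hypothetical helpers illustrating the set-aside rule as described in the text.
# Assumption: the rate applies to the agency's R&D budget as the text phrases it;
# the statute's precise funding base is not modeled here.
THRESHOLD_USD = 100_000_000  # agencies "in excess of $100 million" participate


def sbir_set_aside(rd_budget_usd: float, rate: float) -> float:
    """Return one agency's SBIR set-aside under a simple threshold rule."""
    if rd_budget_usd <= THRESHOLD_USD:
        return 0.0  # at or below the threshold: no set-aside required
    return rd_budget_usd * rate


def implied_base(total_set_aside_usd: float, rate: float) -> float:
    """Back out the qualifying R&D funds implied by a total set-aside at a uniform rate."""
    return total_set_aside_usd / rate


# 1983: a $45 million total at 0.2 percent implies roughly $22.5 billion
# in qualifying R&D funds (assuming every agency applied the same rate).
print(f"${implied_base(45e6, 0.002) / 1e9:.1f} billion")
```

At the 1.25 percent rate reached six years later, the same function shows that an agency with a $1 billion R&D budget would set aside $12.5 million rather than $2 million.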

The legislation authorizing SBIR had two broad goals:

- “to more effectively meet R&D needs brought on by the utilization of small innovative firms (which have been consistently shown to be the most prolific sources of new technologies) and

- to attract private capital to commercialize the results of federal research.”

SBIR's Structure and Role

As conceived in the 1982 Act, SBIR's grant-making process is structured in three phases:

- Phase I is essentially a feasibility study in which award winners undertake a limited amount of research aimed at establishing an idea's scientific and commercial promise. Today, the legislation anticipates Phase I grants as high as $100,000.10

- Phase II grants are larger – normally $750,000 – and fund more extensive R&D to further develop the scientific and technical merit and the feasibility of research ideas.

- Phase III normally does not involve SBIR funds. It is the stage at which grant recipients should be obtaining additional funds either from a procurement program at the agency that made the award, from private investors, or from the capital markets. The objective of this phase is to move the technology to the prototype stage and into the marketplace.

Phase III of the program is often fraught with difficulty for new firms. In practice, agencies have developed different approaches to facilitating this transition to commercial viability; not least among them are additional SBIR awards.11 Some firms with more experience with the program have become skilled in obtaining additional awards. Previous NRC research showed that different firms have quite different objectives in applying to the program. Some seek to demonstrate the potential of promising research. Others seek to fulfill agency research requirements on a cost-effective basis. Still others seek a certification of quality (and the additional awards that can come from such recognition) as they push science-based products toward commercialization.12 Given this variation and the fact that agencies do not maintain data on Phase III, quantifying the contribution of Phase III is difficult.

The 1992 and 2000 SBIR Reauthorizations

The SBIR program approached reauthorization in 1992 amidst continued worries about the U.S. economy's capacity to commercialize inventions. Finding that “U.S. technological performance is challenged less in the creation of new technologies than in their commercialization and adoption,” the National Academy of Sciences at the time recommended an increase in SBIR funding as a means to improve the economy's ability to adopt and commercialize new technologies.13

Accordingly, the Small Business Research and Development Enhancement Act (P.L. 102-564), which reauthorized the program until September 30, 2000, doubled the set-aside rate to 2.5 percent.14 This increase in the percentage of R&D funds allocated to the program was accompanied by a stronger emphasis on encouraging the commercialization of SBIR-funded technologies.15 Legislative language explicitly highlighted commercial potential as a criterion for awarding SBIR grants. For Phase I awards, Congress directed program administrators to assess whether projects have “commercial potential” in addition to scientific and technical merit when evaluating SBIR applications. With respect to Phase II, evaluation of a project's commercial potential was to consider, additionally, the existence of second-phase funding commitments from the private sector or other non-SBIR sources. Evidence of third-phase follow-on commitments, along with other indicators of commercial potential, was also sought. Moreover, the 1992 reauthorization directed that a small business's record of commercialization be taken into account when considering its Phase II application.16

The Small Business Reauthorization Act of 2000 (P.L. 106-554) again extended SBIR until September 30, 2008. It also called for an assessment by the National Research Council of the broader impacts of the program, including those on employment, health, national security, and national competitiveness.17

Previous NRC Assessments of SBIR

Despite its size and tenure, the SBIR program has not been comprehensively examined. There have been some previous studies focusing on specific aspects or components of the program, notably by the General Accounting Office and the Small Business Administration.18 There are, as well, a limited number of internal assessments of agency programs.19 The academic literature on SBIR is also limited.20 Annex E provides a bibliography of SBIR as well as more general references of interest.

Against this background, the National Academies' Committee for Government-Industry Partnerships for the Development of New Technologies, under the leadership of its chairman, Gordon Moore, undertook a review of the SBIR program, its operation, and current challenges. On February 28, 1998, the Committee convened government policy makers, academic researchers, and representatives of small business to hold the first comprehensive discussion of the SBIR program's history and rationale, review existing research, and identify areas for further research and program improvements.21

The Moore Committee reported that:

- SBIR enjoyed strong support both within and outside the Beltway.

- At the same time, the size and significance of SBIR underscored the need for more research on how well it is working and how its operations might be optimized.

- There should be additional clarification about the primary emphasis on commercialization within SBIR, and about how commercialization is defined.

- There should also be clarification on how to evaluate SBIR as a single program that is applied by different agencies in different ways.22

Subsequently, at the request of DoD, the Moore Committee reviewed the operation of the SBIR program at Defense, and in particular the role played by the Fast Track Initiative. This resulted in the largest and most thorough review of an SBIR program to date. The review involved substantial original field research, with 55 case studies, as well as a large survey of award recipients. It found that the SBIR program at Defense was contributing to the achievement of mission goals by funding valuable innovative projects, and that a significant portion of these projects would not have been undertaken in the absence of the SBIR funding. The Moore Committee's assessment also found that the Fast Track Initiative increases the efficiency of the DoD SBIR program by encouraging the commercialization of new technologies and the entry of new firms to the program.

More broadly, the Moore Committee found that SBIR facilitates the development and utilization of human capital and technological knowledge. Case studies have shown that the knowledge and human capital generated by the SBIR program have economic value and can be applied by other firms. And through the certification function, it noted, SBIR awards encourage further private sector investment in the firm's technology.

Based on this and other assessments of public-private partnerships, the Moore Committee's Summary Report on U.S. Government-Industry Partnerships recommended that “regular and rigorous program-based evaluations and feedback is essential for effective partnerships and should be a standard feature,” adding that “greater policy attention and resources to the systematic evaluation of U.S. and foreign partnerships should be encouraged.”23

Preparing the Current Assessment of SBIR

As noted, the legislation mandating the current assessment of the nation's SBIR program focuses on the five agencies that account for 96 percent of program expenditures (although the National Research Council is also seeking to learn of the views and practices of other agencies administering the program). The mandated agencies, in order of program size, are the Department of Defense, the National Institutes of Health, the National Aeronautics and Space Administration, the Department of Energy, and the National Science Foundation. Following the passage of H.R. 5667 in December 2000, extensive discussions were held between the NRC and the responsible agencies on the scope and nature of the mandated study. Agreement on the terms of the study, formalized in a Memorandum of Understanding, was reached in December 2001 (See Annex B), and the funding necessary for the Academies to begin the study was received in September 2002. The study was officially launched on 1 October 2002.

The study will be conducted within the framework provided by the legislation and the NRC's contracts with the five agencies. These contracts identify the following principal tasks:

- Collection and analysis of agency databases and studies,

- Survey of firms and agencies,

- Conduct of case studies organized around a common template, and

- Review and analysis of survey and case study results and program accomplishments.

As per the Memorandum of Understanding between the NRC and the agencies, the study is structured in two phases. Phase I of the study, beginning in October 2002, focuses on identifying data collection needs and developing a research methodology. Phase II of the study, anticipated to start in 2004, will implement the research methodology developed in Phase I.

This document outlines the methodological approach being developed under Phase I of the study. It introduces many of the methodological questions to be encountered during Phase II of the NRC study. Finally, it outlines strategies for resolving these questions, recognizing that some issues can only be resolved in the context of the study itself.

Given that agencies covered in this study differ in their objectives and goals, the assessment will necessarily be agency-specific.24 As appropriate, the Committee will draw useful inter-agency comparisons and multiyear comparisons. In this regard, a table with the agencies in one dimension and all of the identified SBIR objectives in the other may be a useful expository tool. The study will build on the methodological models developed for the 1999 NRC study of the DoD's Fast Track initiative, as appropriate, clearly recognizing that the broader and different scope of the current study will require some adjustments.25 Additional areas of interest, as recognized by the Committee, may also be pursued as time and resources permit.

2. An Overview of the Study Process

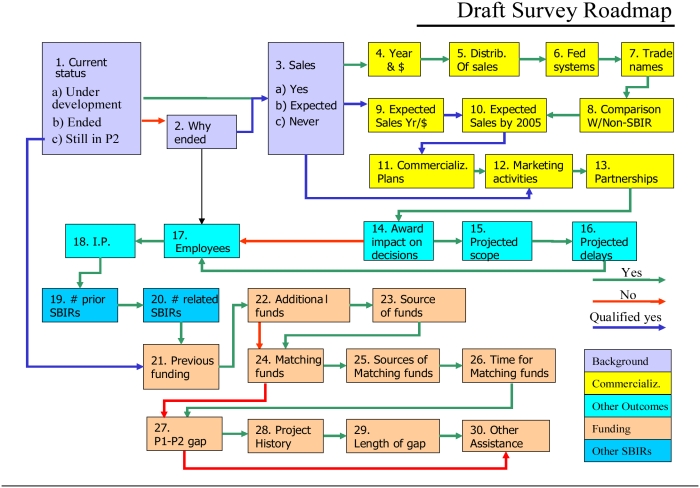

Following its approval of the broad study parameters in October 2002, the Committee set out an overall roadmap to guide the research process. Tasks include the development of a set of operational definitions, the identification of detailed metrics, the review of existing data sources, and the development of primary research methodologies. Because these tasks are closely interrelated, they will be addressed iteratively. (These iterative tasks are represented in the box within Figure 1.) Following completion of field research, the Committee will conduct its analysis and assessment and will issue its findings and recommendations.

The elements of this multi-step process are detailed below:

- Agree on initial guidelines. These initial guidelines are based on the legislation, the Memorandum of Understanding, and contracts.

- Clarify objectives. What central questions must the study answer? What other interesting but optional questions should be addressed? What questions will specifically not be considered? This is discussed further in Section 3 of this chapter.

- Develop operational definitions. For example, while Congress has mandated that the study address the extent to which SBIR supports the agencies' missions, the Committee needs to develop operational definitions of “support” and “agency mission,” in collaboration with agency managers responsible for program operations. This is a necessary step before developing the relevant metrics. This is discussed further in Section 4 of this chapter.

- Identify metrics for addressing study objectives. For example, the Committee will determine the extent of commercialization fostered by SBIR, measured in terms of products procured by agencies, commercial sales, licensing revenue, or other metrics. This is discussed further in Section 5 of this chapter.

- Identify data sources. Implementation of agreed metrics requires data. A wide mix of data sources will be used, so the availability of existing data and the feasibility of collecting needed data by different methods will also condition the selection of metrics, and the choice of study methods. The existence or absence of specific methodologies and data sets will undoubtedly lead to the modification, adoption, or elimination of specific metrics and methods. This is discussed further in Section 6 of this chapter.

- Develop primary research methodologies. The study's primary research components will include interviews, surveys, and case studies to supplement existing data. Control groups and counterfactual approaches will be used where feasible and appropriate to isolate the effects of the SBIR program. Other evaluation methods may also be used on a limited basis as needed to address questions not effectively addressed by the principal methods. This is discussed further in Section 7 of this report.

- Complete Phase I. Phase I of the NRC study will be formally completed once a set of methodologies is developed and documented, is approved by the Committee, and passes successfully through the Academy's peer review process.

- Implement the research program (NRC Study Phase II). The variety of tasks involved in implementing the research program is previewed in Annex I of this report.

- Prepare agency-specific reports. Results from the research program will be presented in five agency-specific reports—one for each of the agencies. Where appropriate, agency-specific findings and recommendations will be formulated by the relevant study subcommittee for review and approval by the full Committee.

- Prepare overview report. A separate summary report, buttressed by the relevant commissioned work and bringing together the findings of the individual agency reports, along with general recommendations, will be produced for distribution. This final report will also draw out, as appropriate, the contrasts and similarities among the agencies in the way they administer SBIR. It will follow the approval procedure outlined above.

- Organize public meetings to review and discuss findings. Following report review, findings and recommendations will be presented publicly for information, review, and comment.

- Submit reports to Congress.

- Disseminate findings broadly.

3. Clarifying Study Objectives

Three primary documents condition and define the objectives for this study: the legislation, H.R. 5667 [Annex A]; the NAS contracts accepted by the five agencies [Annex B]; and the NAS-Agencies Memorandum of Understanding [Annex C]. Based on these three documents, the team's first task is to develop a comprehensive and agreed set of practical objectives that can be reviewed and ultimately approved by the Committee.

The Legislation charges the NRC to “conduct a comprehensive study of how the SBIR program has stimulated technological innovation and used small businesses to meet Federal research and development needs.” H.R. 5667 includes a range of questions [see Annex A]. According to the legislation, the study should:

- review the quality of SBIR research;

- review the SBIR program's value to the agency's mission;

- assess the extent to which SBIR projects achieve some measure of commercialization;

- evaluate economic and non-economic benefits;

- analyze trends in agency R&D support for small business since 1983;

- analyze, for SBIR Phase II awardees, the incidence of follow-on contracts (procurement or non-SBIR Federal R&D);

- perform additional analysis as required to consider specific recommendations on:

  - measuring outcomes for agency strategy and performance;

  - possibly opening Phase II SBIR competitions to all qualifying small businesses (not just SBIR Phase I winners);

  - recouping SBIR funds when companies are sold to foreign purchasers and large companies;

  - increasing Federal procurement of technologies produced by small business;

  - improving the SBIR program.

The items listed under the final point (additional analysis) are questions raised by Congress that will be considered along with other areas of possible recommendation once the data analysis is complete.

The NAS proposal accepted by the agencies on a contractual basis adds a specific focus on commercialization following awards, and “broader policy issues associated with public-private collaborations for technology development and government support for high technology innovation, including bench-marking of foreign programs to encourage small business development.” The proposal includes SBIR's contribution to economic growth and technology development in the context of the economic and non-economic benefits listed in the legislation.

SBIR seeks to meet a number of distinctly different objectives with a single program, and Congress has given no clear guidance about their relative importance. The methodology developed to date assumes that each of the key objectives must be assessed separately, that it will be possible to draw some conclusions about each of the primary objectives, and that it will be possible to draw some comparisons between those assessments. Weighing these objectives against one another in light of the Committee's assessments is a matter for Congress to decide.

At the core of the study is the need to determine how far the SBIR program has evolved from merely requiring more mission agency R&D to be purchased from small firms to an investment in new product innovation that might or might not be purchased later by the agency.

4. Developing Operational Definitions and Concepts

The study will identify core operational terms and concepts in advance of full development of the methodology. The following represents an initial identification of some terms and concepts:

The quality of SBIR research

Quality is a relative concept by definition, so an assessment of SBIR research quality must compare it to the quality of other research.26 Quality is also subjective, so the value realized from research may depend on its perceived utility. The principal comparison here will be with other extramural research funded by the same agencies. Whether comparisons should focus only on R&D by other small businesses is yet to be decided; this decision may be made on an agency-by-agency basis.27

SBIR's value to agency missions

Given that agency missions and their associated sub-unit objectives differ substantially among (and even within) agencies, the issue of SBIR's value to agency missions will be addressed largely in the context of individual agency analysis. While a more generic set of answers would be helpful, it will be important to emphasize the challenges posed by multiple agencies with multiple missions, executed by multiple subunits. For example, some agencies, such as DoD and NASA, are “procurement agencies” that acquire technologies for their own use (DoD, for instance, seeks tools for the nation's military), while others, such as NSF and NIH, are not. These different goals may fundamentally change an agency's view of SBIR's role. Generic mission elements include:

- Technology needs (i.e., agency-identified technology gaps, such as a missing vaccine delivery system identified as a priority by NIH28);

- Procurement needs (i.e., technologies that the agency needs for its own internal use, e.g., optical advances for smart weapons at DoD);

- Expansion or commercialization of knowledge in the agency's field of stewardship (e.g., funding in relatively broad sub-fields of information technology at NSF);29

- Technology transfer (i.e., promoting the adoption of agency-developed technology by others). Technology may become available to the sponsoring agency and others through a variety of paths. These include:

  - Procurement from supply-chain providers,

  - Purchase by the agency on the open market via successful commercialization by the SBIR firm (e.g., purchase of DoD-R&D-supported advanced sonar equipment),

  - Use by others of the technology whose development is sponsored by the agency and made available through such means as licensing, partnership arrangements, or purchase on the open market (e.g., a power plant may adopt technology available on the market and fostered by DoE's SBIR, or a public health clinic may adopt a new vaccine delivery system available on the market and fostered by NIH's SBIR).

To address agency-specific missions (e.g., national defense at DoD, health at NIH, energy at DoE), the Committee will consult closely with agency staff to develop operational definitions of success, in some cases at the level of sub-units (e.g., individual NIH institutes and centers). Some overlap will likely occur (e.g., defense-health needs).

Finally, agencies will undoubtedly have their own conceptions of how their SBIR program is judged in relation to their missions, and it is possible (perhaps likely) that some of these views will not fit well in the areas listed. The Committee is sensitive to these distinctions and differences, and will articulate these concepts at an early stage.

The extent of commercialization

SBIR is charged with supporting the commercialization of technologies developed with federal government support. In many agencies, this requirement is articulated as a focus on the “commercialization” of SBIR-supported research.30 At the simplest level, commercialization means “reaching the market,” which some agency managers interpret as “first sale”: the first sale of a product in the marketplace, whether to public or private sector clients.31 This definition is certainly practical and defensible. However, it risks missing significant components of commercialization that do not result in a discrete sale. It also fails to provide any guidance on how to evaluate the scale of commercialization, which is critical to assessing the degree to which SBIR programs successfully encourage commercialization: the sale of a single widget is not the same as playing a critical role in the original development of Qualcomm's cell-phone technology.

Thus, the Committee's assessment of commercialization will require working operational definitions for a number of components. These include:

- Sales—what constitutes a sale?

- Application—how is the product used? For example, products like software are re-used repeatedly.

- Measuring scale—over what interval is the impact to be measured? (e.g., Qualcomm's SBIR grant was by all accounts very important for the company. The question arises as to how long the dollar value of Qualcomm's wireless-related sales, stemming from its original SBIR grant, should be counted.)32

- Licensing—how should commercial sales generated by third-party licensees of the original technology be counted? Is the licensing revenue from the licensee to be counted, or the sales of that technology by the licensee (or both)?

- Complex sales—technologies are often sold as bundles with other technologies (auto engines with mufflers for example). Given this, how is the share of the total sales value attributable to the technology that received SBIR funding to be defined?

- Lags—some technologies reach market rapidly, but others can take 10 years or more. What is an appropriate discount rate and timeframe to measure award impact?
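The lag question can be made concrete with a small calculation. The Python sketch below is a minimal illustration, not the Committee's method: it assumes a constant annual discount rate and a fixed measurement window, and the function name, the 5 percent rate, and the revenue streams are all hypothetical. It shows how both choices change the measured impact of two awards whose undiscounted revenue is identical.

```python
def discounted_impact(revenues, rate, window_years):
    """Present value, in award-year terms, of commercialization revenue.

    revenues[t] is the revenue observed t years after the award (t = 0, 1, 2, ...).
    Only the first `window_years` entries are counted; later revenue falls outside
    the measurement window. `rate` is an assumed constant annual discount rate.
    """
    if rate <= -1:
        raise ValueError("rate must be greater than -1")
    counted = revenues[:window_years]
    return sum(r / (1 + rate) ** t for t, r in enumerate(counted))


# Hypothetical streams with the same undiscounted total (300) but different lags.
fast = [100, 100, 100]            # reaches market immediately
slow = [0] * 7 + [100, 100, 100]  # reaches market in year 7

for window in (5, 10):
    print(window,
          round(discounted_impact(fast, 0.05, window), 1),
          round(discounted_impact(slow, 0.05, window), 1))
```

With a five-year window the slow stream contributes nothing at all; with a ten-year window it is counted, but at a lower present value than the fast stream. The choice of window can therefore matter as much as the choice of rate.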

Metrics for assessing commercialization can be elusive. Notably, one cannot easily calculate the full value of developed “enabling technology” that can be used across industries. Also elusive is the value of material that enables a commercial service. In such cases, a qualitative approach to “commercialization” will need to be employed.

While the theoretical concept of additionality will be of some relevance to these questions, practicalities must govern, and the availability of data will substantially shape the Committee's approach in this area. This is particularly the case where useful data must be gathered from thousands of companies, often at very considerable expense in dollars and time.33

The NRC study will resolve these very practical questions by the early stages of the study's second phase. The Committee plans to adapt, where appropriate, definitions and approaches used in the Fast Track study for the current study.34

Broad economic effects

SBIR programs may generate a wide range of economic effects. While some of these may be best considered in a national context, others fall more directly on participating firms and on the agencies themselves. The Committee will consider these possible benefits and costs in terms of the level of incidence.

Participating firms

Economic effects on firms include some or all of the following elements:

- Revenue from sale or adoption of SBIR-developed products, services, or processes (this tracks commercialization closely, but not exactly)

- Changes in the firm's access to capital, including ways in which SBIR awards have helped (or hindered) recipient companies in accessing capital markets

- Change in firm viability and sustainability, including how the SBIR program helped bridge the gaps between these interrelated stages of the innovation process:35

  - Conception

  - Innovation

  - Product development

  - Entry into market

- Changes in the propensity to partner and the nature of the partnerships:

  - The impact of SBIR on the frequency with which companies develop partnerships

  - The nature of the partnerships (are the partners public or private?)

- Enhanced firm growth, productivity, and profitability:

  - Change in employment and capitalization36

  - Change in firm productivity

  - Change in profits

- Intellectual property developed by the firm

Work in this section will follow closely on the Fast Track study model, seeking to identify ways in which the recipient firms were affected in the areas listed.37

The agencies

Effects on the agencies include the following:

- Effects on mission support

- Effects on agency research efficiency, including whether –

- SBIR has helped to generate technologies that agencies might not otherwise have developed in the same timeframe without the program

- SBIR is an effective way for agencies to fund competitive research, presumably compared to non-SBIR research funding for small scale requirements

- There are significant benefits to agency missions from the specific effort of SBIR to capture research by small firms. Benchmark numbers for small business contributions to agency research programs before SBIR (pre-1983), and outside SBIR (other programs?) may be needed.

Research efficiency implies a review of the returns to the agency from SBIR investment vs. other research investment. It is important to acknowledge, however, that this analysis will likely not be based on a formal rate-of-return analysis, because the data necessary for such analysis are unlikely to be available at the agency level.

- Effects on agency procurement efficiency

Effects on society

- Social returns refer to the returns to society at large, including private returns and spillover effects. Using the Link/Scott approach from Fast Track as a model is expected to help us conceptualize our approach to this broad effect.38 Other approaches may also be useful.

- Small business support refers to the positive social externalities associated with a vibrant small business sector, including community cohesiveness and improvements to life made possible by new products. Measuring the impact of program support for small business is a major objective,39 given that this support encourages the commercialization of public investment in R&D, the achievement of national missions, and the growth of small firms.

- Training in both business development and technology and innovation. The Audretsch/J. Weigand/C. Weigand Fast Track paper provides a good methodological basis for addressing “training.”40

Non-economic benefits

While it is possible to view almost all non-economic effects through the lens of economic analysis, pushing all effects to economic measurement is usually not feasible and may not be appropriate. Certain effects have been specifically defined as positive outcomes by Congress, regardless of whether they have any measurable impact on economic well-being. This section addresses non-economic benefits, which will in turn have non-economic metrics attached to them, discussed later in this paper.

Knowledge benefits

The missions of several agencies explicitly state the requirement of advancing knowledge in the relevant field. For the SBIR program, this requirement can be viewed from two distinct but complementary perspectives:

- Intellectual property,41 which is governed by a set of legal definitions and is susceptible to close measurement via analysis of patent filings and other largely quantitative assessment strategies.42 Intellectual property rights are generally used to convert knowledge into property for the commercial benefit of the owner. At the same time, intellectual property mechanisms can help disseminate knowledge to others: a patent, for example, gives the holder exclusive rights but also discloses information to others. Intellectual property also includes “trade secrets.” In many cases, the “know-how” that firms keep proprietary may be the most important intellectual property produced by the research, and such know-how may be less susceptible to measurement.

- Non-property knowledge is much less well defined but nonetheless of great importance. Non-property knowledge ranges from formal activities (e.g., papers published in refereed journals, and seminars) to very informal activities (e.g., discussions among researchers and worker mobility). Many relevant concepts are discussed in the literature on human capital. Non-property knowledge is related to education and training and encompasses network capital and tacit expertise that an engineer or scientist may possess.

Other potential non-economic benefits

- Environmental impacts

- Safety

- Quality of life

Trends in agency funding for small business

For this study, “small business funding” will be defined as synonymous with SBIR. A definition of “small” is also needed. The SBIR definition (fewer than 500 employees) is quite broad.43 Dividing firm participants into size subcategories may therefore be advantageous. We plan a breakdown of small firms by size, taking into account existing SBA classifications and natural divisions that emerge from the data.
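A breakdown of this kind amounts to binning firms by headcount. The Python sketch below is a minimal illustration only: the bin edges and the function names `size_class` and `size_distribution` are hypothetical placeholders, not SBA classifications, and would be replaced by whatever divisions the SBA categories and the data suggest. Only the ceiling (fewer than 500 employees) comes from the SBIR definition.

```python
from collections import Counter

# Hypothetical size bins for firms under the SBIR ceiling (fewer than 500 employees).
# The edges are illustrative only and are NOT SBA classifications.
SIZE_BINS = [
    (1, 10, "1-10"),
    (11, 50, "11-50"),
    (51, 100, "51-100"),
    (101, 250, "101-250"),
    (251, 499, "251-499"),
]

def size_class(employees: int) -> str:
    """Return the size-class label for a firm's employee count."""
    for low, high, label in SIZE_BINS:
        if low <= employees <= high:
            return label
    raise ValueError(f"{employees} employees is outside the SBIR small-business range")

def size_distribution(headcounts):
    """Count participating firms in each size class."""
    return Counter(size_class(n) for n in headcounts)
```

Keeping the edges in one table makes it easy to re-run the breakdown once the natural divisions in the data are known.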

The agencies have considerable discretion in defining which agency expenditures and disbursements they consider to be R&D, and thus subject to the percentage requirements of the SBIR set-aside. Small firms also receive R&D funding directly from the agencies outside the SBIR program, and receive subcontracts for R&D from primes or other subcontractors, whose original funding source was federal R&D. Since in some cases the prime or intermediate contractor may also be a small business, there is an opportunity for double counting as well as for undercounting. It is important to keep in mind that the congressional intent was to increase the amount of federal R&D funding ultimately reaching small businesses.

Many small businesses cannot take on more R&D funding, because they lack the expert staff or the culture to do R&D. Thus, there might conceivably be a sort of saturation effect. The issue of absorptive capacity also arises in the case of fast-moving high-tech firms, which may not be willing to risk the overhead and delay involved in seeking federal funds at all. In the present study, saturation effects can be examined in part by investigating the relationship between the growth of grant-program funding and the growth of grant-program applications. Insights into the impact of expanded grant funding on small-business response capacity and on research quality may be gained by analyzing ATP's experience, based on changes in reviewer technical scores and small-business application rates as the program expanded dramatically between 1993 and 1994.
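One simple way to operationalize the relationship between funding growth and application growth is to compare the two growth rates interval by interval. The Python sketch below assumes annual series of program funding and application counts; the function names and any data passed to them are hypothetical. A ratio well below 1 would mean applications lagged funding, which is consistent with (though not proof of) a saturation effect. A regression of application growth on funding growth would be a natural refinement.

```python
def growth_rates(values):
    """Fractional change between consecutive annual values."""
    return [(curr - prev) / prev for prev, curr in zip(values, values[1:])]

def response_ratio(funding, applications):
    """Application growth divided by funding growth, interval by interval.

    A ratio well below 1 means applications grew more slowly than funding,
    one possible (hypothetical) indicator of saturation.
    Intervals with no change in funding are reported as None.
    """
    ratios = []
    for f, a in zip(growth_rates(funding), growth_rates(applications)):
        ratios.append(None if f == 0 else a / f)
    return ratios
```

For example, a doubling of funding (growth of 1.0) accompanied by 20 percent growth in applications yields a ratio of 0.2.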

Best practices and procedures in operating SBIR programs

Issues related to administrative process, both within agencies and across agencies, will be defined over the course of the first phase of the NRC study. Areas to be addressed may include:

- Outreach

- Topic development

- Application procedures and timelines

- Project monitoring

- Agency management funding

- Project funding limitations

- Bridge funding

- Post SBIR Phase II support

5. Potential Metrics for Addressing Study Objectives

In keeping with the definitions and concepts in the previous section, the NRC study will identify the desired measures for expressing results related to each of the objectives defined in section 3, Clarifying Study Objectives. It is important to note that the metrics ultimately used in the study will be selected partly for their theoretical importance in answering critical questions and partly on practical grounds. Here we list a set of draft metrics of clear utility to the study; not all will ultimately be adopted, and others will undoubtedly be developed as additional elements emerge during the research.

Research quality44

- Internal measures of research quality: These will be based on comparative survey results from agency managers on the quality of SBIR-funded research versus the quality of other agency research. It is important to recognize here that standards and reviewer biases may differ between the selection of SBIR awards and the selection of other awards.

- External measures of research quality

- Peer-reviewed publications

- Citations

- Technology awards from organizations outside the SBIR agency

- Patents

- Patent citations

Agency mission

Agency missions vary; for example, procurement is not relevant to the SBIR programs at NSF and NIH (and at parts of DoE). The value of SBIR to the agency mission can best be addressed through surveys at the sub-unit manager level, similar to the approach demonstrated in Archibald and Finifter's (2000) Fast Track study, which provides a useful model in this area.45 These surveys will seek to address:

- The alignment between agency SBIR objectives and agency mission

- Agency-specific metrics (to be determined)

- Procurement:

- The rate at which agency procurement from small firms has changed since inception of SBIR;

- The change in the time elapsed between a proposal arriving on an agency's desk and the contract arriving at the small business;

- The rate at which SBIR firm involvement in procurement has changed over time;

- Comparison of SBIR-related procurement with other procurement emerging from extramural agency R&D;

- Technology procurement in the agency as a whole;

- Agency success metrics: How does the agency assess and reward management performance? Issues include:

- Time elapsed between a proposal arriving on an agency's desk and the contract arriving at the small business.

- Minimization of lags in converting from SBIR Phase I to Phase II

Parallel data collection across the five agency SBIR programs will compile year-by-year program demographics for approximately the last decade. Data compilation requests will include the number of applications, number of awards, ratio of awards to applications, and total dollars awarded for each phase of the multi-phase program. It will cover the geographical distribution of applicants, awards, and success rates; statistics on applications and awards by women-owned and minority-owned companies; statistics on commercialization strategies and outcomes; results of agency-initiated data collection and analysis; and uniform data from a set of case studies for each agency.
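Once the year-by-year data are compiled, the ratio of awards to applications can be computed for each agency and phase. The sketch below is illustrative only: the record layout (`PhaseYear`) and its field names are hypothetical, not an existing agency or SBA format.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class PhaseYear:
    """One row of a hypothetical compiled table: one agency, year, and phase."""
    agency: str
    year: int
    phase: int              # 1 or 2
    applications: int
    awards: int
    dollars_awarded: float

def award_ratio(rows):
    """Pool rows by (agency, phase) and return awards / applications for each."""
    apps = defaultdict(int)
    awards = defaultdict(int)
    for r in rows:
        key = (r.agency, r.phase)
        apps[key] += r.applications
        awards[key] += r.awards
    return {k: awards[k] / apps[k] for k in apps if apps[k] > 0}
```

Pooling applications and awards across years, as here, weights high-volume years more heavily than averaging the yearly ratios would; either choice is defensible, provided it is applied consistently across agencies.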

The Committee plans to draw on the following data collection instruments:

- Phase I recipient survey

- Phase II recipient survey

- SBIR program manager survey

- COTAR (technical point of contact) survey

- data from selected case studies

Data collected from these surveys and case studies will be added to existing public sources of data that will be used in the study, such as:

- all agency data covering award applications, awards, outcomes, and program management

- patent and citation data

- venture capital data

- census data

Additional data may be collected as a follow-up based on an analysis of responses.

The study will examine the agency rates of transition between phases, pending receipt of the agency databases for applications and awards of Phase I and Phase II.
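A transition rate of this kind is straightforward to compute once each Phase II award can be traced to its Phase I predecessor. The sketch below assumes such a link exists; the function name and the "parent identifier" field are hypothetical, and in practice the agency databases may not record this link directly.

```python
def transition_rate(phase1_ids, phase2_parent_ids):
    """Share of Phase I awards in a cohort that were followed by a Phase II award.

    phase1_ids: identifiers of the Phase I awards in the cohort.
    phase2_parent_ids: for each Phase II award, the identifier of the Phase I
    award it follows (a hypothetical linking field).
    """
    cohort = set(phase1_ids)
    if not cohort:
        raise ValueError("empty Phase I cohort")
    converted = cohort & set(phase2_parent_ids)
    return len(converted) / len(cohort)
```

Phase II awards whose parent lies outside the cohort (for example, from an earlier year) are ignored, so the rate refers only to the Phase I cohort supplied.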

The Phase II survey will gather information on all Phase III activity, including commercial sales, sales to the federal government, export sales, follow-on federal R&D contracts, further investment in the technology from various sources, marketing activities, and identification of commercial products or federal programs that incorporate the products. SBIR program manager surveys and interviews will address federal efforts to exploit the results of SBIR Phase II in Phase III federal programs.

Commercialization

First order metrics for commercialization revolve around these basic areas:

- Sales (firm revenues)

- Direct sales in the open market as a percentage of total sales

- Indirect sales (e.g. bundled with other products and services) as a percent of total sales

- Licensing or sale of technology

- Contracts relating to products

- Contracts relating to the means of production or delivery—processes

- SBIR-related products, services, and processes procured by government agencies.

- Spin-off of firms

The issue of commercial success goes beyond whether project awards go to firms that then succeed in the market. These firms may well have succeeded anyway, or they may simply have displaced other firms that would have succeeded had their rival not received a subsidy. The issue is whether SBIR increases the number of small businesses that succeed in the market. If the data permit, the study team may try to emulate the research of Feldman and Kelley to test the hypothesis that SBIR increases the number of small businesses that pursue their research projects or achieve other goals.46
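Feldman and Kelley's own method is not reproduced here. As a simpler stand-in, one could compare the share of awardees that continued their projects with the share among comparable non-awardees (for instance, near-miss applicants) using a standard two-proportion z-test. The function name and the counts in the example are hypothetical. Such a comparison does not by itself remove selection bias; it only indicates whether the difference in rates is larger than chance alone would suggest.

```python
import math

def two_proportion_z(success_a, n_a, success_b, n_b):
    """z statistic for the difference between two proportions (pooled standard error)."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    if pooled in (0, 1):
        raise ValueError("test is undefined when all or none of the firms succeed")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se

# Hypothetical counts: 60 of 100 awardees vs. 40 of 100 near-miss applicants
# continued their projects.
z = two_proportion_z(60, 100, 40, 100)
```

With these hypothetical counts, z is about 2.83, which exceeds the conventional 1.96 threshold for a two-sided test at the 5 percent level.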

Broad economic benefits

For firms

- Support for firm development, which may include:

- Creation of a firm (i.e., has SBIR led to the creation of a firm that otherwise would not have been founded)

- Survival

- Growth in size (employment, revenues)

- Merger activity

- Reputation

- Increase in stock value/IPO, etc.47

- Formation of collaborative arrangements to pursue commercialization, including pre-competitive R&D or a place in the supply chain

- Investment in plant (production capacity)

- Other pre-revenue activities aimed at commercialization, such as entry into the regulatory pipeline and development of prototypes

- Access to capital

- Private capital

- From angel investors

- From venture capitalists

- Banks and commercial lenders

- Capital contributions from other firms

- Stock issue of the SBIR-recipient firm, e.g., initial public offerings (IPO)

- Subsequent (non-SBIR) funding or procurement from government agencies

For agencies (aside from mission support and procurement)

- Enhanced research efficiency

- Outcomes from SBIR vs. non-SBIR research

- Agency manager attitudes toward SBIR

For society at large

Social returns include private returns, agency returns, and spillover effects from research, development, and commercialization of new products, processes, and services associated with SBIR projects. It is difficult, if not impossible, to capture social returns fully, but an attempt will be made to capture at least part of the effects beyond those identified above including the following:

- Evidence of spillover effects

- Small business support:

- Small business share of agency R&D funding

- Survival rates for SBIR supported firms

- Growth and success measures for SBIR vs. non-SBIR firms

- Training:

- SBIR impact on entrepreneurial activity among scientists and engineers

- Management advice from venture capital firms

- Other training effects.

Non-economic benefits

Knowledge benefits

- Intellectual property

- Patents filed and granted

- Patent citations

- Litigation

- Non-intellectual property

- Journal articles and citations

- Human capital measures

Other non-economic benefits

Given the complexity of the NRC study, the Committee is unlikely to devote substantial resources to this area. However, some evidence about other non-economic benefits (e.g., environmental or safety impacts) may emerge from the case studies and interviews.

Trends in agency funding for small business

- Absolute SBIR funding levels

- SBIR vs. other agency extramural research funding received by small businesses

- Agency funding for small business relative to overall sources of funding in the US economy

Best practices in SBIR funding

It will be important to analyze the categories below with respect to the size of the firm.

- Recipient views on process

- Management views on process

- Flexibility of process, e.g., award size

- Timeliness of application decision process

- Management actions on troubled projects

Possible independent variables: demographic characteristics

For all of the outcome metrics listed above, it will be important to capture a range of demographic variables that could become independent variables in empirical analyses.

Bias

What is the best way of assessing SBIR? One approach, utilized by many agencies when examining their SBIR programs, has been to highlight successful firms. Another approach has been to survey firms that have been funded under the SBIR program, asking such questions as whether the technologies funded were ever commercialized, the extent to which their development would have occurred without the public award, and how firms assessed their experiences with the program more generally. It is important to recognize and account for the biases that arise with these and other approaches. Some possible sources of bias are noted below:48

- Response bias—1: Many awardees may have a stake in the programs that have funded them, and consequently feel inclined to give favorable answers (i.e., that they have received benefits from the program and that commercialization would not have taken place without the awards). This may be a particular problem in the case of the SBIR initiative, since many small high-technology company executives have organized to lobby for its renewal.

- Response bias—2: Some firms may be unwilling to acknowledge that they received important benefits from participating in public programs, lest they attract unwelcome attention.

- Measurement bias: It may simply be very difficult to identify the marginal contribution of an SBIR award, which may be one of many sources of financing that a firm employed to develop a given technology.

- Selection bias: This source of bias concerns whether SBIR selects firms that already have the characteristics needed for higher growth and survival, although the extent of this bias is likely overdrawn, since an important role of SBIR is to telegraph information about firms to markets operating under conditions of imperfect information.49

- Management bias: Information from agency managers, who must defend their SBIR management before the Congress, may be subject to bias in different ways.

- Size bias: The relationship between firm size and innovative activity is not clear from the academic literature.50 Some indexes may show large firms as more successful (publications and total patents, for example), while others show small firms as more successful (patents per employee, for example).
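The size-bias point can be made concrete with two hypothetical firms: ranking by total patents and ranking by patents per employee can put them in opposite orders. The firms, their figures, and the helper `rank` below are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Firm:
    name: str
    employees: int
    patents: int

def rank(firms, metric):
    """Return firm names ordered from highest to lowest on the given metric."""
    return [f.name for f in sorted(firms, key=metric, reverse=True)]

# Two hypothetical firms: one near the SBIR size ceiling, one very small.
firms = [
    Firm("Firm A", employees=450, patents=45),
    Firm("Firm B", employees=20, patents=6),
]

by_total = rank(firms, lambda f: f.patents)                    # Firm A first (45 vs. 6)
by_intensity = rank(firms, lambda f: f.patents / f.employees)  # Firm B first (0.3 vs. 0.1)
```

Because the ordering depends on the normalization chosen, reporting both absolute and per-employee measures, broken down by size class, guards against reading a size effect into either one.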

A complement of approaches will be developed to address the issue of bias. In addition to a survey of program managers, we intend to interview firms as well as agency officials, employ a range of metrics, and use a variety of methodologies.

The Committee is aware of the multiple challenges in reviewing “the value to the Federal research agencies of the research projects being conducted under the SBIR program…” [H.R. 5667, sec. 108]. These challenges stem from the fact that

- the agencies differ significantly by mission, R&D management structures (e.g., degree of centralization), and manner in which SBIR is employed (e.g., administration as grants vs. contracts); and

- different individuals within agencies have different perspectives regarding both the goals and the merits of SBIR-funded research.

The Committee proposes multiple approaches to assessing the contributions of the program to agency mission, in light of the complicating factors mentioned above:

- A planned survey of all individuals within studied agencies having SBIR program management responsibilities (that is, going beyond the single "Program Manager" in a given agency). The survey will be designed and implemented with the objective of minimizing framing bias. We will reduce sampling bias by soliciting responses from R&D managers without direct SBIR responsibilities as well as those who have both. Important areas of inquiry include study of the process by which topics are defined, solicitations developed, projects scored, and award selections made.

- Systematic gathering and critical analysis of the agencies' own data concerning take-up of the products of SBIR funded research.

- Study of the role of multiple-award-winning firms in performing agency relevant research;

- A possible study comparing funded and nearly funded projects at NIH (possibly extended to other agencies).

6. Existing Data Sources

Specific data requirements are driven by a study's methodology, and their definition would normally follow that discussion. As noted earlier, however, the status of prior agency studies and agency databases will affect the methodologies selected and hence data collection needs. The Committee will make an initial effort to identify and review existing data sources. These will be extended and undoubtedly modified during the early stages of Phase II of the NRC study.

In general, existing data will be extracted mainly from the following sources:

- Agency SBIR databases

- Published agency reports

- Internal agency analysis

- SBA and GAO reports

- Previously conducted recipient surveys

- Academic literature

- Prior NRC studies

These existing data sources are briefly discussed below.

Existing agency and SBA reports

The agencies appear to have produced few major reports on their own SBIR programs, aside from annual reports to SBA. In addition to Fast Track, DoD has unpublished studies; NASA recently completed some analysis; NSF also has some internal assessments. These agency reports must be assessed for accuracy and comprehensiveness as an early-stage priority under Phase II of the NRC study.51 Annex E provides a list of these agency studies.

Existing agency SBIR databases

All five agencies maintain databases of awards and awardees. This information typically contains basic information about the awardee (e.g., company name, Principal Investigator, contact address), information about the award (amount, date, award number), and in many cases, additional detailed project information (e.g., proposal summary, commercialization prospects).52

In general, the agency databases offer reasonably strong input data – award amounts, dates, Principal Investigator information etc. – and relatively weak output data – commercial impact etc. The agency databases may have information on modifications that have added funds, but do not typically contain sufficient information about the use of funds (The abstract, which may be useful for case study decisions, does not lend itself to statistical use since the sample size is one for each unique abstract.)53

Thus, the agency databases will be most useful as sources of two critical sets of information:

- Basic information about awards, including some demographic data about awardees;

- Contact information for awardees, useful as the survey distribution lists are developed.

More technically, issues related to agency databases may include:

- Completeness of the agency's data

- Do the data cover all of the applications received by the agency?

- Are all grants accounted for? Is the contact data up to date (i.e., what percentage respond to a contact effort based on this information)?

- What year was the database started?

- Does it maintain information about non-awardees?

- What percent of SBIR Phase I awards get converted to Phase II awards?

- How many SBIR Phase II contracts lead to Phase III?

- Accuracy of the data: The biggest challenge here will be the transient nature of the firms and the information.

- PIs come and go; firms shrink and grow; firms are acquired; firms may close down, move, or change names;

- Answers often depend on whom you ask;

- Firms that are very successful may have new management in place as a result of venture capital activity or other financial arrangements, or due to firm acquisition by another organization;

- There is often a long gestation between the SBIR Phase II award and the achievement of significant revenues. Other SBIR grants and other R&D may often have occurred in the interval. There may no longer be anyone at the firm who knows of the link between the product and the SBIR award;

- The most serious analytical issue may be the dependency on self-reporting, as the agencies generally know little about commercialization except that which is self-reported by the firm.

- Depth of the data: Do the data reach firm-level variables, award data, projects, and outcomes? Conversely, what major gaps in the data should be filled by primary research? The Committee will also need to assess data collected by agencies beyond that required by SBA, to see if there are opportunities and/or gaps.

- The expanded role of DoD data: Recent DoD collections include information on projects in the earlier studies, as well as in the next Fast Track; about one-third of the DoD collection is on projects awarded by other agencies. Note that information from the various data collections has not been cross-referenced and analyzed. It will take extensive effort to properly identify each project in each collection (the collections, for example, lack common unique identifiers).

- The form of the data: Are the agency data in paper form or computerized?
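Because the collections lack common unique identifiers, projects will have to be linked on other fields. A minimal sketch, assuming each record carries a firm name and an award year (the field names `firm` and `award_year`, the suffix list, and both functions are hypothetical), is to normalize firm names and pair records that agree on both fields. The resulting pairs are candidates for manual review, not confirmed links, and firms that changed names, as noted above, would still be missed.

```python
import re
from collections import defaultdict

# Hypothetical corporate suffixes to ignore; a real list would be reviewed by hand.
SUFFIXES = {"inc", "incorporated", "corp", "corporation", "llc", "co", "company", "ltd"}

def normalize_name(name: str) -> str:
    """Lowercase, replace punctuation with spaces, and drop common corporate suffixes."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(t for t in tokens if t not in SUFFIXES)

def candidate_matches(collection_a, collection_b):
    """Pair records from two collections sharing a normalized firm name and award year."""
    index = defaultdict(list)
    for rec in collection_b:
        index[(normalize_name(rec["firm"]), rec["award_year"])].append(rec)
    pairs = []
    for rec in collection_a:
        key = (normalize_name(rec["firm"]), rec["award_year"])
        for match in index.get(key, []):
            pairs.append((rec, match))
    return pairs
```

Indexing one collection by the normalized key keeps the comparison linear in the number of records rather than comparing every pair.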

Relevant Features of Existing Survey Data

Four substantial surveys have addressed commercial and other outcomes from SBIR: GAO (1992), DoD (1997), SBA (1999), and DoD Fast Track.54 In many areas, these surveys ask similar or identical questions, creating extensive databases of results relevant to many of the metrics being considered for use in this study.

The Fast Track surveys each addressed a single SBIR Phase II award and collected some information on the firm; between 80 and 90 percent of the questions were about the specific award. Some firms have only one award; some have over 100. GAO (1992), SBA (1999), and DoD (1997) each surveyed 100 percent of the SBIR Phase II awards made from 1983 through an end date four years prior to the date of the survey: i.e., GAO (1992) surveyed, in 1991, all SBIR Phase II project awards from 1983 through 1987.55 These studies provide coverage for the early years of the program.
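The coverage rule just described reduces to simple arithmetic: the last award year covered is the survey year minus four. The sketch below encodes that rule (the function name is hypothetical) and reproduces the GAO case stated in the text.

```python
FIRST_AWARD_YEAR = 1983  # start year used by the GAO, SBA, and DoD surveys described above

def coverage_window(survey_year: int, lag_years: int = 4) -> tuple:
    """Return the (first, last) Phase II award years covered by a census-style survey
    that includes all awards made through `lag_years` before the survey year."""
    return FIRST_AWARD_YEAR, survey_year - lag_years

gao_window = coverage_window(1991)  # GAO survey fielded in 1991: (1983, 1987)
```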

The existing survey results showed the distribution of commercialization to be highly skewed. For example, 868 of the 1,310 reporting projects in the SBA survey had no sales. Fifty-five had over $5 million in sales; one of these was over $240 million, two were slightly over $100 million, and five were between $46 million and $60 million. Those 55 projects represent 1.5 percent of the number surveyed and 4.2 percent of the responses, but 76 percent of the total sales. This means that in collecting commercialization data, firm selection becomes critically important. Surveying a high percentage of the awards with a long survey imposes a substantial burden and risks causing multiple-award winners not to respond.56
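To make the skew concrete, the short Python sketch below recomputes the response shares from the SBA figures quoted above and derives the response rate they imply. The helper `top_share` is a hypothetical illustration of how the concentration figure (76 percent of sales in the top projects) could be computed from project-level sales data, which are not reproduced here.

```python
responses = 1310   # projects reporting in the SBA survey
no_sales = 868     # reporting projects with no sales
over_5m = 55       # projects with more than $5 million in sales

share_no_sales = no_sales / responses   # ≈ 0.663 of responding projects
share_over_5m = over_5m / responses     # ≈ 0.042, the "4.2 percent of the responses"

# The same 55 projects are 1.5 percent of all projects surveyed, so the implied
# response rate is 0.015 / 0.042 ≈ 0.36 (approximate, since both shares are rounded).
implied_response_rate = 0.015 / share_over_5m

def top_share(values, k):
    """Fraction of the total contributed by the k largest values."""
    ordered = sorted(values, reverse=True)
    total = sum(ordered)
    return sum(ordered[:k]) / total if total else 0.0
```

An implied response rate of roughly one-third underlines why nonresponse, especially among multiple-award winners, matters so much when results are this concentrated.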

A note on the SBA Tech-Net database: SBA maintains a database of information derived from the annual reports made on SBIR by the agencies.57 Mandatory collected data includes award year and amount, agency topic number, awarding agency, phase, title, and agency tracking number. (Tracking numbers were not mandatory through 1998.)

However, this database is far from complete for our purposes:

- Principal Investigator (PI) information: Today, reporting the PI name is mandatory, but although there are fields for title, email address, and phone, these are not mandatory entries for the agencies to report. As recently as 1998, agencies did not have to report the name of the PI.

- Company information: There are fields for the name, title, phone, and email of a company contact official, but these fields are not mandatory for the agencies to report.

- Award information: Agency award contract or grant number, solicitation number, year of solicitation, and number of employees have fields, but they are not mandatory.

- Technical project information: There are large fields for technical abstract, project anticipated results, and project comments, but they are not mandatory.

- Women and minorities: Although reporting on minority or women ownership is mandatory, the SBA data on this point were not complete for the years before 1993.58

- Other data: Data such as award dates for SBIR Phase I and Phase II, completion date for each phase, additional (non-SBIR Phase II) and subsequent funding provided by the agencies, agency POC for each SBIR Phase II, information on cost sharing (if applicable), etc. may be available in some agency databases.

7. Methodology Development: Primary Research

The wide scope of the current study and gaps in the existing data will necessitate a considerable amount of primary research. The approach adopted is to select the methodological elements best suited to complement and supplement existing information. The study objectives will be realized using the most efficient combination of methods.59 These include analyzing existing studies and databases, interviewing program officials, surveying various program and technical managers and project participants, carrying out case studies, using control groups and counterfactual approaches to isolate the effects of the SBIR program, and other methods such as econometric, sociometric, and bibliometric analysis. These tools will be used on an as-needed, limited basis to address the questions for which they are best suited.60

A dictionary of variable names with definitions that are common across all of the instruments will be developed. This dictionary will form a part of the training materials used by interviewers, survey managers, and those populating variables with administrative data.
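A shared variable dictionary can also serve as a validation tool when variables are populated. The sketch below shows one possible structure; the variable names, definitions, instrument labels, and the `validate` function are hypothetical examples, not the Committee's actual dictionary.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Variable:
    """One entry in a hypothetical shared variable dictionary."""
    name: str
    definition: str
    dtype: type
    instruments: tuple = ()  # surveys or data sources that populate this variable

DICTIONARY = {
    v.name: v
    for v in [
        Variable("award_year", "Year in which the award was made", int,
                 ("phase1_survey", "phase2_survey", "agency_db")),
        Variable("employees", "Number of employees at time of survey", int,
                 ("phase1_survey", "phase2_survey")),
        Variable("agency", "Awarding agency (DoD, NIH, NASA, DoE, NSF)", str,
                 ("agency_db",)),
    ]
}

def validate(record: dict) -> list:
    """Return a list of problems found when checking a record against the dictionary."""
    problems = []
    for key, value in record.items():
        var = DICTIONARY.get(key)
        if var is None:
            problems.append(f"unknown variable: {key}")
        elif not isinstance(value, var.dtype):
            problems.append(f"{key}: expected {var.dtype.__name__}, got {type(value).__name__}")
    return problems
```

Keeping definitions and types in one place means interviewers, survey managers, and those loading administrative data all check against the same source.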

Surveys

Surveys are an important methodological element of the study.

Program staff will be interviewed, with these interviews focusing (at least initially) on process issues, such as mechanisms and selection procedures, and on the contribution of the program to the agency. This will include understanding the motivations and objectives of the program managers. What are their goals and incentives? How is their performance within the agency SBIR program judged? Developing a core questionnaire and a basic reporting template may be appropriate, even though interviews with more senior program managers are likely to be free-ranging, with many open-ended questions, and more agency-specific than those with participants. A core template with five derivative templates (one for each of the five agencies identified in the legislation) seems a promising approach. Higher-level research officials, such as deputy institute directors, may be interviewed about SBIR in comparison with other research support provided by the agency.

SBIR award recipients will also be surveyed. The key issue here will be to identify the correct respondent, one who both knows the answers and is willing to fill out the instrument. The survey will begin by contacting those already in the database of firm information, which covers all applicants for SBIR Phase I or Phase II grants.61 The database includes the name of the SBIR Point of Contact (POC) for each firm (along with phone, address, and email). In fact, the database covers many firms that have also received awards from NSF, NASA, and DoE. Most NIH awardees do not submit proposals to these agencies and, therefore, are not covered. Surveys will be field-tested to ensure that they are effective and encourage compliance.

The first step will be to develop a short survey to cover those firms lacking a point of contact (POC). This survey will ask for information about the POC and pose a very small set of firm-related questions. This will facilitate development of a comprehensive database of POCs.

Subsequent recipient surveys will be directed to these POCs, although it is likely that certain information will require responses at the corporate level of the firm and at the level of the principal investigator (PI).

The following questionnaires and surveys will likely be administered:

- Survey of program managers, focusing on major strategic questions and overall program issues and concerns;

- Survey of technical managers focusing on operations and issues of program implementation;

- Survey of SBIR Phase II participants, focusing both on outcomes from SBIR grants (especially commercial outcomes) and on program management issues from the recipient perspective. This survey is likely to have both a general and an agency-specific component. It is also likely to have a section focused on company impacts (as opposed to project impacts);

- Survey of SBIR Phase I participants, focusing on initial selection and support issues;

- Additional limited surveys focusing on particular aspects of the program, possibly at specific agencies, can be initiated, with limiting parameters to be specified.

Each of the survey instruments will have a stated purpose, and each will be mapped to the study objectives it addresses.62 All surveys will be pre-tested. These surveys are discussed in more detail below.

Program manager survey

The program manager survey will focus on strategic management issues and on manager views of the program. It will be designed to capture senior agency views on the operations of the SBIR program focused on concerns such as funding amounts and flexibility, outreach, topic development, top-level agency support for SBIR, and evaluation strategies.

The survey may be administered through face-to-face interviews with senior managers, by telephone, by mail, via electronic questionnaire, or through some combination of these approaches. It will cover all senior program managers at each agency and all program managers at the sub-unit level (e.g., NIH institutes, DoD agencies). Altogether, the five study agencies have approximately 45 program managers at this level.

Technical manager survey

While program managers should have a strategic view of the SBIR program at their agency, the program is to a considerable extent operated by other managers. The responsibilities of these technical managers (or TMs) are focused on the development of appropriate topics, appointment of selection panels, process management (e.g., ensuring that reviews are received on time and that the selection and management process meets approved timelines), and contacts with the grant recipients themselves.

The Committee plans to conduct informal interviews with selected TMs. In addition, a survey instrument is being designed for distribution to every TM in each agency. This instrument will address technical management issues and will focus on the relationship between SBIR projects and the non-SBIR components of each agency's research and development program. Within DoD, for example, TMs may play a pivotal role in the subsequent take-up of SBIR-funded research, and the survey aims to improve assessment of that possibility.

The survey will therefore be delivered to all TMs in the five agencies. Approximately 200-300 potential survey recipients are anticipated.

SBIR Phase I recipient survey

In order to identify characteristics of firms and projects that received SBIR Phase I awards only, the Committee anticipates the implementation of a survey of SBIR Phase I recipients. The objective of this survey is to enhance understanding about project outcomes, and to identify possible weaknesses in the SBIR Phase I—Phase II transition that may have excluded worthy projects from SBIR Phase II funding. (It should be understood that the Committee has no preconceptions on this issue—only that this is an important transition point and winnowing mechanism in SBIR, and should therefore be reviewed.)

More than 40,000 SBIR Phase I grants have been made, so it is not feasible to survey all SBIR Phase I winners. The Committee will therefore develop an initial set of selection criteria. These criteria are intended to ensure that outcomes are assessed across a range of potential independent variables. They will include:

- Size of firm

- Geographic location

- Women and minority ownership

- Agency

- Multiple vs. single award winners

- Industry sector
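One way to apply such criteria is a stratified draw. Awards are grouped by the chosen criteria, and a fixed number is sampled from each group so that no observed combination goes unrepresented. The sketch below is illustrative only. The record fields, the per-stratum quota, and the function name `stratified_sample` are hypothetical and do not reflect any agency database.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseIAward:
    # Hypothetical record layout; field names are illustrative only.
    award_id: str
    agency: str
    firm_size: str               # e.g. "1-10", "51+"
    region: str
    women_or_minority_owned: bool
    multiple_awards: bool
    sector: str


def stratified_sample(awards, strata_fields, per_stratum, seed=0):
    """Draw up to `per_stratum` awards from every observed combination of
    `strata_fields`, so each combination of selection criteria is represented."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for award in awards:
        key = tuple(getattr(award, name) for name in strata_fields)
        groups[key].append(award)
    sample = []
    for key in sorted(groups):  # sorted so the draw is reproducible
        members = groups[key]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


# Usage with synthetic records: three agency/size strata of unequal size.
combos = [("DoD", "1-10"), ("DoD", "51+"), ("NIH", "1-10"), ("NIH", "1-10")] * 5
awards = [
    PhaseIAward(f"A{i}", agency, size, "Northeast", i % 2 == 0, i % 3 == 0, "software")
    for i, (agency, size) in enumerate(combos)
]
sample = stratified_sample(awards, ["agency", "firm_size"], per_stratum=2)
```

With a quota of two, the large NIH stratum contributes no more awards than the two smaller DoD strata. This is the purpose of stratifying on the criteria rather than sampling at random from the pooled list.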

SBIR Phase II recipient surveys

The SBIR Phase II recipient survey will be a central component of the research methodology. It will address commercial outcomes, process issues, and post-SBIR concerns about subsequent support for successful companies. Surveys must provide data that will allow the Committee to address the various questions defined in sections 3 and 4. Specifically, survey methodologies will need to differentiate between:

- Funded and unfunded applications

- Women-led and minority-led businesses

- Different geographical regions or perhaps clusters of zip codes

- SBIR Phase I vs. Phase II awards

- Firms by size: single-person companies vs. micro corporations vs. relatively large established companies (100+ employees?).63

- Firms by total revenues and by revenues attributable to the SBIR-related commercialization

- Firms by employment effects

- Recipients of single vs. multiple awards

- Other criteria, including the procedural efficiency of converting from Phase I to Phase II

The Committee is also interested in finding relevant points of comparison between research quality and research value. However, such comparisons are complicated because SBIR and non-SBIR funding is differentiated not only by the size of the firm but also by the kind of research, by funding rationale, and by time horizon. For example, NSF views SBIR as a tool for funding research that leads to commercialization, while the remaining 97.5 percent of NSF funding is for non-commercial research. Here, a comparison would be inappropriate. In addition, non-SBIR grants operate under different timeframes and are usually at a different phase of the R&D cycle, requiring different resource commitments.

To address this point, the Committee will consider whether the Phase II survey should be expanded to identify awards that have received some form of quality recognition from an outside agency. Such recognition needs careful interpretation. For example, if the only competitors for it are other SBIR projects (as is the case with the Tibbetts Award), it may identify the best SBIR projects but say little about how they compare with non-SBIR projects.

All of these data will be collected on an agency-by-agency basis to ensure sufficient data for a statistical analysis of each agency. The result will be a survey matrix. Its x-axis will show potential explanatory variables, such as multiple- vs. single-award winners, and its y-axis will show the individual agencies.64 (Each cell of the matrix is important to the extent that the specified data help to address study objectives.) Detailed articulation between objectives and survey instruments will be an early-stage task for Phase II of this study. See Annex F for a prototype of this matrix.
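A minimal sketch of how such a matrix might be tallied is shown below. It assumes that survey responses are stored as simple records with an agency field and one field per explanatory variable. The field names, the minimum cell size of 30, and the helper functions are hypothetical. The sketch only shows how sparse cells could be flagged; these are agency and variable combinations with too few responses for separate analysis.

```python
from collections import Counter

AGENCIES = ["DoD", "NIH", "NASA", "DoE", "NSF"]  # y-axis, in order of program size


def survey_matrix(responses, variable, levels):
    """Rows: agencies (y-axis). Columns: levels of one explanatory variable
    (x-axis). Each cell counts the responses falling in that combination."""
    counts = Counter((r["agency"], r[variable]) for r in responses)
    return {agency: {level: counts[(agency, level)] for level in levels}
            for agency in AGENCIES}


def sparse_cells(matrix, minimum):
    """Cells with fewer than `minimum` responses (an assumed analysis threshold)."""
    return [(agency, level)
            for agency, row in matrix.items()
            for level, n in row.items()
            if n < minimum]


# Usage with synthetic responses.
responses = ([{"agency": "DoD", "award_pattern": "multiple"}] * 40
             + [{"agency": "DoD", "award_pattern": "single"}] * 12
             + [{"agency": "NSF", "award_pattern": "single"}] * 35)
matrix = survey_matrix(responses, "award_pattern", ["single", "multiple"])
gaps = sparse_cells(matrix, minimum=30)
```

Listing sparse cells early in data collection would show where targeted follow-up or additional sampling is needed before agency-level comparisons are attempted.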

Background

Award numbers. Data inconsistencies mean that the exact number of SBIR Phase II awards made from 1992 to 2000 is not known, but it is estimated at about 10,800. Based on the three published reports, about 7 percent of these SBIR Phase II awards were made by the smaller agencies. Thus, an estimated 10,000 awards were made by the five study agencies. There are no good data on the distribution of awards by firm (some firms have received more than 100 awards, many others just one).
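The estimate for the five study agencies follows from the two figures above:

```latex
N_{\text{study agencies}} \approx 10{,}800 \times (1 - 0.07) = 10{,}044 \approx 10{,}000
```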

Existing commercialization data. DoD has data by project for 10,372 SBIR Phase II projects, including projects dating back to 1983. Since 1999, firms that have submitted SBIR or STTR proposals to DoD have been required to enter firm information. They must also report sales and investments for all of the SBIR Phase II awards they have received, regardless of awarding agency.

The DoD commercialization database contains information on approximately 75 percent of DoD Phase II awards from 1992 to 2000, 67 percent of NASA and DoE awards, 54 percent of NSF awards, and 16 percent of NIH/HHS awards. DoE has provided commercialization data by product. These data cannot be directly associated with projects, because doing so may lead to double counting of awards to firms. NASA does have data by project, although these data do not appear to correspond directly to the DoD data.

Sampling Approaches and Issues

The question of sampling is of central importance here, and a more extended discussion of the issues raised can be found in Annex G.

The Committee proposes to use an array of sampling techniques, to ensure that sufficient projects are surveyed to address a wide range of both outcomes and potential explanatory variables, and also to address the problem of skew noted earlier.

- Random sample. After the 10,000 awards are integrated into a single database, approximately 20 percent of each year's awards will be randomly sampled (e.g., 20 percent of the 1992 awards). Drawing the sample one year at a time will better capture changes in the program over time. Otherwise, the larger number of awards made in recent years could dominate the sample.

- Random sample by agency. Sampled awards will then be grouped by agency. Additional awards will be randomly selected as required to ensure that at least 20 percent of each agency's awards are included in the sample.

- Top performers. In addition to the random sample, the problem of skew will be addressed by surveying all projects that meet a specific commercialization threshold. The threshold will most likely be $5 million in sales or $5 million in additional investment (derived from the commercialization database). Estimates from current DoD commercialization data indicate that the “top performer” part of the survey would cover approximately 385 projects.

- Firm surveys. All projects awarded to firms with only one or two awards will be surveyed. Based on data from 1983 to 1993, these are estimated at approximately 30 percent of the 10,000 SBIR Phase II awards. These are the hardest firms to find: address information is highly perishable, so response rates are much lower.

- Coding. The project database will track which sample component or components each response belongs to. For example, a randomly sampled project from a firm with only two awards could also be a top performer. Its response could then be coded in four ways: as part of the random sample for the program, the random sample for the awarding agency, the top performers, and the sample of single or double winners. The database will also code each response for the array of potential explanatory or demographic variables listed earlier.

- Total number of surveys. With the random sample set at 20 percent, the approach described above will generate approximately 5,500 project surveys and approximately 3,000 firm surveys (assuming that each firm receiving at least one project survey also receives a firm survey). Although this approach samples more than 50 percent of the awards, multiple-award winners would be asked to respond to surveys covering only about 20 percent of their projects.
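The sampling components above can be sketched as follows. This is a minimal illustration that assumes a single integrated award table. The record layout, the exact form of the $5 million threshold test, and the function names are hypothetical, and the actual design may differ (for example, in how the agency top-up is drawn). Each selected award carries a set of codes, so one response can count toward several components.

```python
import math
import random
from collections import Counter, defaultdict
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class PhaseIIAward:
    # Hypothetical record layout; not an actual agency schema.
    award_id: str
    year: int
    agency: str
    firm_id: str
    sales: float       # sales attributed to the project, dollars
    investment: float  # additional investment attracted, dollars


RATE = Fraction(1, 5)    # 20 percent random sampling fraction (exact arithmetic)
THRESHOLD = 5_000_000    # assumed top-performer cut-off ($5 million)


def draw_sample(awards, seed=0):
    """Return {award_id: set of sample codes} for every selected award."""
    rng = random.Random(seed)
    codes = defaultdict(set)

    def has(award, code):
        # .get() avoids creating empty entries in the defaultdict
        return code in codes.get(award.award_id, ())

    # 1. Program-wide random sample, drawn one award year at a time.
    by_year = defaultdict(list)
    for a in awards:
        by_year[a.year].append(a)
    for year in sorted(by_year):
        group = by_year[year]
        for a in rng.sample(group, round(RATE * len(group))):
            codes[a.award_id].update({"random_program", "random_agency"})

    # 2. Agency top-up: add random awards until each agency reaches RATE.
    by_agency = defaultdict(list)
    for a in awards:
        by_agency[a.agency].append(a)
    for agency in sorted(by_agency):
        group = by_agency[agency]
        have = sum(1 for a in group if has(a, "random_agency"))
        need = max(0, math.ceil(RATE * len(group)) - have)
        pool = [a for a in group if not has(a, "random_agency")]
        for a in rng.sample(pool, need):
            codes[a.award_id].add("random_agency")

    # 3. Top performers: every project at or above either threshold.
    for a in awards:
        if a.sales >= THRESHOLD or a.investment >= THRESHOLD:
            codes[a.award_id].add("top_performer")

    # 4. All projects from firms holding only one or two Phase II awards.
    per_firm = Counter(a.firm_id for a in awards)
    for a in awards:
        if per_firm[a.firm_id] <= 2:
            codes[a.award_id].add("one_or_two_award_firm")

    return dict(codes)


def firm_survey_count(awards, codes):
    """Each firm with at least one sampled project also gets a firm survey."""
    return len({a.firm_id for a in awards if a.award_id in codes})


# Usage with synthetic data: 500 awards, 40 repeat-award firms, 20 one-award firms.
AGENCIES = ["DoD", "NIH", "NASA", "DoE", "NSF"]
awards = [
    PhaseIIAward(
        award_id=f"P{i}",
        year=1992 + i % 9,
        agency=AGENCIES[i % 5],
        firm_id=f"F{i % 40}" if i < 480 else f"S{i}",
        sales=6e6 if i % 97 == 0 else 0.0,
        investment=0.0,
    )
    for i in range(500)
]
codes = draw_sample(awards)
```

Codes are stored per award rather than in separate lists. As a result, an overlapping selection (for instance, a top performer that was also randomly sampled) produces one project survey, not several, while still counting toward every component it belongs to.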

Projected response rates. The response rate is expected to be highly variable. It will depend partly on the quality of the address information, which itself reflects the effort spent collecting and verifying addresses before surveys are administered. It will also depend on the extent of follow-up with non-respondents. The latter is especially important: one agency manager noted that his survey had a final response rate of 70-80 percent, but that the initial rate before follow-up phone calls was approximately 15 percent.

As noted in Siegel, Waldman, and Youngdahl (1997), response rates to technology surveys are notoriously low, averaging somewhere in the teens. A 20 percent response rate for a technology survey can therefore be considered high. This is especially true when the survey samples small firms and the sample may shrink through exits or mergers and acquisitions.

The NRC surveys are expected to exceed this benchmark for two reasons.

- Experience: The NRC has assembled expertise with an excellent track record of effective sampling of firms. Previous NRC survey work on the Department of Defense SBIR Fast Track program yielded a response rate of 68 percent.

- Stewardship: Substantial time and effort will be devoted to following up the survey with phone calls to non-respondents and those that provide incomplete information.
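A minimal sketch of the bookkeeping behind this follow-up is shown below. It assumes that each contact record notes whether the address was verified, whether a response arrived, and whether that response was complete. The field names and reason labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    # Hypothetical tracking record for one surveyed firm.
    firm_id: str
    address_verified: bool
    responded: bool = False
    complete: bool = False


def response_rate(contacts):
    """Share of contacted firms that returned a survey."""
    return sum(c.responded for c in contacts) / len(contacts)


def follow_up_list(contacts):
    """Firms needing a follow-up call, with the reason for the call."""
    plan = []
    for c in contacts:
        if not c.responded:
            reason = "non-respondent" if c.address_verified else "verify address"
        elif not c.complete:
            reason = "incomplete"
        else:
            continue  # complete response: no follow-up needed
        plan.append((c.firm_id, reason))
    return plan


# Usage with four synthetic contacts.
contacts = [
    Contact("F1", True, responded=True, complete=True),
    Contact("F2", True, responded=True, complete=False),
    Contact("F3", False),
    Contact("F4", True),
]
```

Non-respondents with unverified addresses are flagged separately. A phone call to them may first need to locate the firm, which fits the observation that address information is highly perishable.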

While the NRC study expects a significant response rate, based on the same techniques as have proved successful in the past, it is inherently difficult to predict the precise size of the actual result.

Starting date and coverage

Surveys administered in 2004 will cover SBIR awards through 2000. 1992 is a realistic starting date for the coverage, allowing inclusion of the same projects as DoD for 1991 and 1992, and the same as SBA for 1991, 1992, and 1993. This would add to the longitudinal capacities of the study.

Projects awarded before 1992 suffer from potentially irredeemable data loss: firms and PIs are no longer in place, and the data collected at the time were very limited.

Delivery modalities

Possible delivery modalities for surveys will include:

- Online

- By phone

- By mail

- In person (interviews or focus groups)

Clearly, online surveys have many advantages (lower cost, greater speed, and possibly higher response rates), and such surveys can now be created at minimal cost using third-party services. Response rates become clear fairly quickly and can rapidly show where follow-up with non-respondents is needed. Inconsistent responses are also easier to clarify when data are collected online. Finally, online surveys allow dynamic branching of question sets, so that respondents answer some subsets of questions but not others, depending on their prior responses.
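Dynamic branching can be sketched as a list of questions, each with an optional condition on earlier answers. The question wording, the identifiers, and the `run_survey` helper below are hypothetical and for illustration only. Third-party survey services typically offer the same idea through their own skip-logic settings.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    qid: str
    text: str
    # Condition on the answers given so far; None means always ask.
    show_if: Optional[Callable[[dict], bool]] = None


# Hypothetical question set, for illustration only.
QUESTIONS = [
    Question("q1", "Has the project generated any sales? (yes/no)"),
    Question("q2", "Total sales to date, in dollars?",
             show_if=lambda answers: answers.get("q1") == "yes"),
    Question("q3", "Main reason no sales have occurred?",
             show_if=lambda answers: answers.get("q1") == "no"),
    Question("q4", "How many SBIR Phase II awards has the firm received?"),
]


def run_survey(questions, respond):
    """Ask questions in order, skipping any whose condition is not met.
    `respond` stands in for the respondent (a web form, an interviewer)."""
    answers = {}
    for q in questions:
        if q.show_if is None or q.show_if(answers):
            answers[q.qid] = respond(q)
    return answers


# Usage: a respondent with no sales never sees q2.
canned = {"q1": "no", "q3": "still in development", "q4": "3"}
answers = run_survey(QUESTIONS, lambda q: canned[q.qid])
```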

There are also some potential advantages to traditional paper surveys. Paper surveys may be easier to circulate, allowing those responsible at a firm to answer relevant parts of the questionnaire. Firms with multiple SBIR grants also often seek to exercise some quality control over their responses; after assigning surveys to different people, answers may be centrally reviewed for consistency.

It may be appropriate to take a phased approach to the survey work, with more expensive approaches (e.g., phone solicitation) supplementing email, specifically to ensure appropriate coverage of the various groups outlined above.

Case study method

Case studies will be another central component of the study. Second- and third-level benefits in particular will be addressed primarily through focused case studies, as will information about the procurement needs of Federal agencies.69

Research objectives addressed primarily through case studies may include:

- generating detailed data not accessible through surveys

- pursuing lines of inquiry suggested by surveys

- identifying anecdotes that illuminate more general findings.