NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Hughes RG, editor. Patient Safety and Quality: An Evidence-Based Handbook for Nurses. Rockville (MD): Agency for Healthcare Research and Quality (US); 2008 Apr.

Introduction

In addition to putting the spotlight on the staggering numbers of Americans who die each year as a result of preventable medical error, the Institute of Medicine’s (IOM’s) seminal report, To Err is Human: Building a Safer Health System, repeatedly underscored the message that the majority of the factors that give rise to preventable adverse events are systemic; that is, they are not the result of poorly performing individual nurses, physicians, or other providers.1 Although it was not the intent of To Err is Human to treat systems thinking and human factors principles in great detail, it cited the work of many prominent human factors investigators and pointed out the impressive safety gains made in other high-risk industries such as aviation, chemical processing, and nuclear power. One of the beneficial consequences of the report is that it introduced a wide audience of health services researchers and practitioners to systems and human factors concepts they might not otherwise have encountered. Similarly, the report brought to the attention of the human factors community serious health care problems that it could address. Today, both health care and human factors practitioners are venturing beyond their traditional boundaries, working together in teams, and benefiting from the sharing of new perspectives and clinical knowledge. The purpose of the present chapter is to further this collaboration between health care and human factors, especially as it is relevant to nursing, and to continue the dialogue on the interdependent system factors that underlie patient safety.

Human Factors—What Is It?

The study of human factors has traditionally focused on human beings and how we interact with products, devices, procedures, work spaces, and the environments encountered at work and in daily living.2 Most individuals have encountered a product, piece of equipment, or work environment that leads to less than optimal human performance. If human strengths and limitations are not taken into account in the design process, devices can be confusing or difficult to use, unsafe, or inefficient. Work environments can be disruptive and stressful and can lead to unnecessary fatigue. For those who like comprehensive, formal definitions, consider the following, adapted from Chapanis and colleagues:3

Human factors research discovers and applies information about human behavior, abilities, limitations, and other characteristics to the design of tools, machines, systems, tasks, jobs, and environments for productive, safe, comfortable, and effective human use.

This definition can be simplified as follows:

Human factors research applies knowledge about human strengths and limitations to the design of interactive systems of people, equipment, and their environment to ensure their effectiveness, safety, and ease of use.

This definition implies that the tasks that nurses perform, the technology they are called upon to use, the work environment in which they function, and the organizational policies that shape their activities may or may not be a good fit for their strengths and limitations. When these system factors and the sensory, behavioral, and cognitive characteristics of providers are poorly matched, substandard outcomes frequently follow with respect to effort expended, quality of care, job satisfaction, and, perhaps most important, the safety of patients.

Many nursing work processes have evolved as a result of local practice or personal preference rather than through a systematic approach to designing a system that leads to fewer errors and greater efficiency. Far too often, providers and administrators have fallen into a “status quo trap,” doing things simply because they have always been done that way. Human factors practitioners, on the other hand, take human strengths and weaknesses into account in the design of systems, emphasizing the importance of avoiding reliance on memory, vigilance, and follow-up intentions, areas where human performance is less reliable. Key processes can be simplified and standardized, which leads to less confusion, gains in efficiency, and fewer errors. When care processes become standardized, nurses have more time to attend to individual patients’ specialized needs, which typically are not subject to standardization. When medical devices and new technology are designed with the end user in mind, they can be easy to use and can help providers detect or prevent errors, in contrast to many current “opaque” computer-controlled devices that prevent the provider from understanding their full functionality.

The field of human factors does not focus solely on devices and technology. Although human factors research emerged during World War II as a result of equipment displays and controls that were not well suited to the visual and motor abilities of human operators, each subsequent decade of human factors work has witnessed a broadening of the human performance issues considered worthy of investigation. More recently, a number of human factors investigators with interests in health care quality and safety have advocated addressing a more comprehensive range of sociotechnical system factors, including not only patients, providers, the tasks performed, and teamwork, but also work environments or microsystems, organizational and management issues, and socioeconomic factors external to the institution.4–7 One of the lessons stemming from a systems approach is that significant improvements in quality and safety are likely to be best achieved by attending to and correcting the misalignments among these interdependent levels of care. As continued major breakdowns such as inadequate transitions of patient care show, managing these system interdependencies is a major challenge faced by providers and their human factors partners alike.

Understanding Systems

At a very basic level, a system is simply a set of interdependent components interacting to achieve a common specified goal. Systems are such a ubiquitous part of our lives that we often fail to recognize that we are active participants in many systems throughout the day. When we get up in the morning, we are dependent on our household systems (e.g., plumbing, lighting, ventilation) to function smoothly; when we send our children off to school, we are participants in the school system; and when we get on the highway and commute to work, we are participants in (and sometimes victims of) our transportation system. At work, we find ourselves engaged simultaneously in several systems at different levels. We might report to work in a somewhat self-contained setting such as the intensive care unit (ICU) or operating room (OR), which human factors practitioners refer to as a microsystem, yet the larger system is the hospital itself, which, in turn, is likely to be just one facility in a still larger health care system or network, which itself is just one of the threads that make up the fabric of our broader and quite diffuse national health care system. The key point is that we need to recognize and understand the functioning of the many systems that we are part of and how policies and actions in one part of the overall system can affect the safety, quality, and efficiency of other parts.

Systems thinking has not come naturally to health care professionals.8 Although health care providers work together, they are trained in separate disciplines where the primary emphasis is the mastery of the skills and knowledge to diagnose ailments and render care. In the pursuit of becoming as knowledgeable and skillful as possible in their individual disciplines, a challenge facing nursing, medicine, and the other care specialties is to remain aware that each is but one component of a very intricate and fragmented web of interacting subsystems of care in which no single person or entity is in charge. This is how the authors of To Err is Human defined our health system:1

Health care is composed of a large set of interacting systems (paramedic, emergency, ambulatory, inpatient care, and home health care; testing and imaging laboratories; pharmacies; and so forth) that are coupled in a loosely connected but intricate network of individuals, teams, procedures, regulations, communications, equipment, and devices that function with diffused management in a variable and uncertain environment. Physicians in community practice may be so tenuously connected that they do not even view themselves as part of the system of care.

A well-known expression in patient safety is that each system is perfectly designed to achieve exactly the results that it gets. It was popularized by a highly respected physician, Donald Berwick of the Institute for Healthcare Improvement, who understands the nature of systems. If we reap what we sow, as the expression implies, and given that one does not have to be a systems engineer to understand systems, it makes sense for all providers to understand the workings of the systems of which they are a part. It is unfortunate that today one can receive an otherwise superb nursing or medical education and still receive very little instruction on the nature of the systems that will shape and influence every moment of a provider’s working life.

Sociotechnical System Models

With a systems perspective, the focus is on the interactions or interdependencies among the components and not just the components themselves. Several investigators have proposed slightly different models of important interrelated system factors, but they all seem to start with individual tasks performed at the point of patient care and then progressively expand to encompass other factors at higher organizational levels. Table 1 shows the similarity among three of these models. In an examination of system factors in the radiation oncology therapy environment, Henriksen and colleagues4 examined the role of individual characteristics of providers (e.g., skills, knowledge, experience); the nature of the work performed (e.g., competing tasks, procedures/practices, patient load, complexity of treatment); the physical environment (e.g., lighting, noise, temperature, workplace layout, distractions); the human-system interfaces (e.g., equipment location, controls and displays, software, patient charts); the organizational/social environment (e.g., organizational climate, group norms, morale, communication); and management (e.g., staffing, organization structure, production schedule, resource availability, and commitment to quality). Vincent and colleagues5 also proposed a hierarchical framework of factors influencing clinical practice that included patient characteristics, task factors, individual (staff) factors, team factors, work environment, and organizational and management factors. Carayon and Smith6 proposed a work system model in which interacting subsystems of people (disciplines) perform tasks using various tools and technology within a physical environment in pursuit of organizational goals; the work system, in turn, serves as an input to care processes and ultimately to outcomes for patients, providers, and the organization alike.
The similarity among these independently derived models is quite striking, in that they are all sociotechnical system models involving technical, environmental, and social components.

Human Error—A Troublesome Term

While one frequently finds references to human error in the mass media, the term has actually fallen into disfavor among many patient safety researchers. The reasons are fairly straightforward. The term has little explanatory power; it explains nothing beyond the fact that a human was involved in the mishap. Too often the term “human error” connotes blame and a search for the guilty culprits, suggesting some sort of human deficiency or lack of attentiveness. When human error is viewed as a cause rather than a consequence, it serves as a cloak for our ignorance. By serving as an end point rather than a starting point, it retards further understanding. It is essential to recognize that errors or preventable adverse events are simply the symptoms or indicators that there are defects elsewhere in the system and not the defects themselves. In other words, the error is just the tip of the iceberg; it is what lies underneath that we need to worry about. When serious investigations of preventable adverse events are undertaken, the error serves simply as the starting point for a more careful examination of the contributing system defects that led to the error. However, a very common but misdirected response to managing error is to “put out the fire”: identify the individuals involved, determine their culpability, schedule them for retraining or disciplinary action, introduce new procedures or retrofixes, and issue proclamations for greater vigilance. An approach aimed at the individual is the equivalent of swatting individual mosquitoes rather than draining the swamp to address the source of the problem.

A disturbing quality in many investigations of preventable adverse events is the hidden role that human bias can play. Despite the best of intentions, humans do not always make fair and impartial assessments of events and other people. A good example is hindsight bias.9–12 As noted by Reason,10 the most significant psychological difference between individuals who were involved in events leading up to a mishap and those who are called upon to investigate it after it has occurred is knowledge of the outcome. Investigators have the luxury of hindsight in knowing how things are going to turn out; nurses, physicians, and technicians at the sharp end do not. Hindsight bias is the tendency of individuals who know the outcome to exaggerate the extent to which they could have predicted the event before it occurred. Given the advantage of a known outcome, what would have been a bewildering array of nonconvergent events becomes assimilated into a coherent causal framework for making sense out of what happened. If investigations of adverse events are to be fair and yield new knowledge, greater attention needs to be directed at the precursory and antecedent circumstances that existed for sharp end personnel before the mishap occurred. The point of investigating preventable adverse health care events is primarily to make sense of the factors that contribute to omissions and misdirected actions when they occur.11, 12 This in no way denies the fact that well-intended providers do things that inflict harm on patients, nor does it lessen individual accountability. Quite simply, one has to look closely at all the factors contributing to the adverse event and not just at the most immediate individual involved.

In addition to hindsight bias, investigations of accidents are also susceptible to what social psychologists have termed the fundamental attribution error.13 Human observers or investigators tend to make a fundamental error when they set out to determine the causal factors of someone’s mistake. Rather than giving careful consideration to the prevailing situational and organizational factors that are present when misfortune befalls someone else, the observer tends to make dispositional attributions and views the mishap as evidence of some inherent character flaw or defect in the individual. For example, a nurse who administers the wrong medication to an emergency department (ED) patient at the end of a 10-hour shift may be judged by peers and the public as negligent or incompetent. On the other hand, when misfortune befalls individuals themselves, they are more likely to attribute the cause to situational or contextual factors rather than dispositional ones. To continue with the example, the nurse who actually administered the incorrect medication in the ED may attribute the cause to the stressful and hurried work environment, the physician’s messily scribbled prescription, or fatigue after 10 intense hours of work.

Pragmatic and System Characteristics

Rasmussen14 points out the arbitrary and somewhat pragmatic aspects of investigations of human error and system performance. When system performance is below some specified standard, an effort is made to back-track the chain of events and circumstances to find the causes. How far back to go or when to stop are open questions, the answers to which are likely to vary among different investigators. One could stop at the provider’s actions and claim medical error, or one could seek to identify other reasons (poor communication, confusing equipment interfaces, lack of standardized procedures, interruptions in the care environment, diffusion of responsibility, management neglect) that may have served as contributing factors. Rasmussen notes that the search for causes will stop when one comes across one or more factors that are familiar (and will therefore serve as acceptable explanations) and for which there are available corrections or cures. Since there is no well-defined starting point toward which one is progressively working backward through the causal chain, how far back one is willing to search is likely to depend on pragmatic considerations such as resources, time constraints, and internal political ramifications. Rasmussen also observes that some human actions become classified as human error simply because they are performed in unkind work environments; that is, work environments where there is not much tolerance for individual experimentation and where it is not possible for individuals to correct inappropriate actions before they lead to undesirable consequences. In some unkind environments, it may not be possible to reverse the inappropriate actions, while in others it may not be possible to foresee the undesirable consequences. Rasmussen’s unkind work environment is quite similar to Perrow’s notion of tightness of coupling in complex systems.15

Perrow’s analysis of system disasters in high-risk industries shifts the burden of responsibility from the front-line operator of the system to actual properties of the system. Using the concepts of tightness of coupling and interactive complexity, Perrow focuses on the inherent characteristics of systems that make some industries more prone to accidents.15 Tightness of coupling refers to dependencies among operational sequences that are relatively intolerant of delays and deviations, while interactive complexity refers to the number of ways system components (i.e., equipment, procedures, people) can interact, especially unexpectedly. It is the multiple and unexpected interactions of malfunctioning parts, inadequate procedures, and unanticipated actions, each innocuous by itself, in tightly coupled systems that give rise to accidents. Such accidents are rare but inevitable, even “normal,” to use Perrow’s terminology. By understanding the special characteristics of high-risk systems, decisionmakers might be able to avoid blaming the wrong components of the system and also refrain from technological fixes that serve only to make the system riskier.

A Human Factors Framework

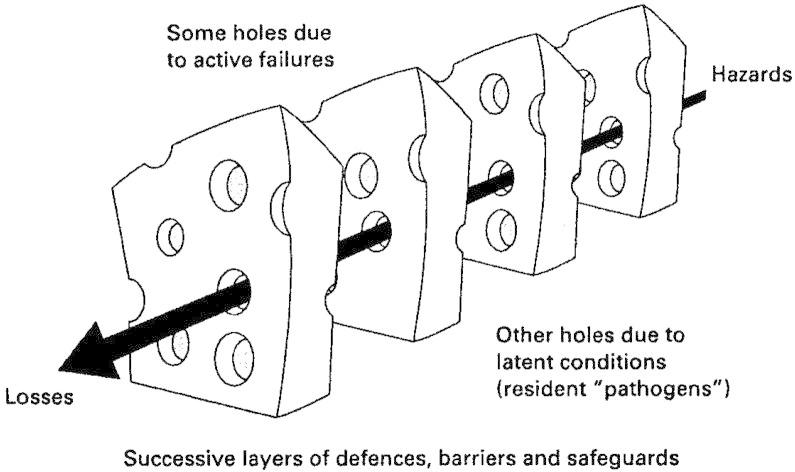

Figure 1 shows many of the components or major factors that need to be addressed to gain a better understanding of the nature of preventable adverse events. What the figure does not portray very well is the way in which these major factors can interact with one another. A basic tenet of any systems approach to adverse events is that changes in one part of the system will surely have repercussions on another part of the system. Hence, it is important to focus on the way these components can interact and influence one another and not just the components themselves. When these components are functioning well together, they serve collectively as a set of barriers or system of defenses to the occurrence of preventable adverse events. However, it is when weaknesses or vulnerabilities exist within these components and they interact or align themselves in such a way that the weaknesses overlap that preventable adverse events occur. This way of describing “holes” that exist in the successive components or layers of defenses has more light-heartedly been dubbed the “Swiss cheese” model of accident causation, made popular by James Reason, a prominent British psychologist who has dramatically influenced the way we think about patient safety.16 Figure 2 shows the Swiss cheese model of accident causation and how the trajectory of hazards can result in losses or adverse events.

In brief, many adverse events result from this unique interaction or alignment of several necessary but singly insufficient factors. Weaknesses in these factors typically are present in the system long before the occurrence of an adverse event. All that is needed is for a sufficient number to become aligned for a serious adverse event to occur.

The distinction made by Reason between latent conditions and active errors, shown along the left margin of Figure 1, also is very important.11, 17 In health care, active errors are committed by those providers (e.g., nurses, physicians, technicians) who are in the middle of the action, responding to patient needs at the sharp end.18 Latent conditions are the potential contributing factors that are hidden and lie dormant in the health care delivery system, occurring upstream at the more remote tiers, far removed from the active end. These latent conditions, which are more organizational, contextual, diffuse, or design related in nature, reside at what has been dubbed the blunt end.18 The distinction between latent conditions and active errors is important because it allows us to see clearly that nurses, who have the greatest degree of patient contact, are actually the last line of defense against medical error (and hence the most vulnerable). As such, nurses can inherit the less recognized sins of omission and commission of everyone else who has played a role in the design of the health care delivery system. Reason perhaps makes this point best:10

Rather than being the main instigators of an accident, operators tend to be inheritors of system defects created by poor design, incorrect installation, faulty maintenance and bad management decisions. Their part is usually that of adding a final garnish to a lethal brew whose ingredients have already been long in the cooking.

The human factors framework outlined here allows us to examine a wide range of latent conditions that are part of the health care sociotechnical system in which providers reside.

Individual Characteristics

Figure 1 identifies individual characteristics as a first-tier factor that has a direct impact on provider performance and whether that performance is likely to be considered acceptable or substandard. Individual characteristics include all the qualities that individuals bring with them to the job, such as knowledge, skill level, experience, intelligence, sensory capabilities, training and education, and even organismic and attitudinal states such as alertness, fatigue, and motivation, to mention just a few. The knowledge and skills that health care providers develop prior to employment through accredited training programs are fundamental to their ability to perform their work. At the same time, organismic factors such as fatigue resulting from long hours and stress can influence the ability of providers to apply their specialized knowledge optimally. Communication ability and cultural competency skills should also be included at this level. Fortunately, few critics would argue that these skills and abilities are unimportant to optimal health care delivery and outcomes.

The Nature of the Work

The second-tier factor in Figure 1, the nature of the work, refers to characteristics of the work itself and includes the extent to which well-defined procedures are utilized, the nature of the workflow, peak and nonpeak patient loads, the presence or absence of teamwork, the complexity of treatments, equipment functioning and downtime, interruptions and competing tasks, and the physical/cognitive requirements for performing the work. Although empirical studies on the impact of these work-related factors in health care settings are not as plentiful as they are in the human factors literature, they do exist. For example, a review of the external beam radiation therapy literature19 found fewer treatment administration errors when therapists worked in pairs20 and greater numbers of treatment administration errors at higher patient census levels.21 If management becomes overly ambitious in directing a high volume of patients to be treated in a fixed period of time, the consequence for radiation therapists is a high-pressure work environment and an increase in the number of adverse events. The human factors literature, by contrast, contains an abundance of research on the effects of work-related factors on human performance, drawn largely from defense-related operations and other highly hazardous industries where proficient human performance plays a critical role.22–25

Human-System Interfaces

The human-system interface refers to the manner in which two subsystems (typically human and equipment) interact or communicate within the boundaries of the system. This is shown as a third-tier factor in Figure 1. Nurses use medical devices and equipment extensively and thus have plentiful first-hand experience with the poor fit that frequently exists between the design of the devices’ controls and displays and the capabilities and knowledge of users. One approach for investigating the mismatches between devices and people is to recognize that there is an expanding progression of interfaces in health care settings, each with its own vulnerabilities and opportunities for confusion.26, 27 Starting at the very center with the patient, a patient-device interface needs to be recognized. Does the device or accessory attachment need to be fitted or adapted to the patient? What physical, cognitive, and affective characteristics of the patient need to be taken into account in the design and use of the device? What sort of understanding does the patient need to have of device operation and monitoring? With the increasing migration of sophisticated devices into the home as a result of strong economic pressures to move patients out of hospitals as soon as possible, safe home use of devices becomes a serious challenge. This is especially true for elderly patients with comorbidities, who may be leaving the hospital sicker as a result of shorter stays, and for homes whose suitability may be called into question (e.g., home caregivers are also likely to be aged, and the immediate home environment layout may not be conducive to device use). In brief, the role of the patient in relation to the device and its immediate environment necessitates careful examination.
At the same time, the migration of devices into the home nicely illustrates the convergence of several system factors—health care economics, shifting demographics, acute and chronic needs of patients, competency of home caregivers, supportiveness of home environments for device use—that in their collective interactivity and complexity can bring about threats to patient safety and quality of care.

Providers of care are subject to a similar set of device use issues. Human factors practitioners who focus on the provider (user)–device interface are concerned about the provider’s ability to operate, maintain, and understand the overall functionality of the device, as well as its connections and functionality in relation to other system components. In addition to controls and displays that need to be designed with human motor and sensory capabilities in mind, the device needs to be designed in a way that enables the nurse or physician to quickly determine the state of the device. Increasing miniaturization of computer-controlled devices has increased their quality but can leave providers with a limited understanding of the full functionality of the device. With a poor understanding of device functionality, providers are at a further loss when the device malfunctions and when swift decisive action may be critical for patient care. The design challenge is in creating provider-device interfaces that facilitate the formation of appropriate mental models of device functioning and that encourage meaningful dialogue and sharing of tasks between user and device. Providers also have a role in voicing their concerns regarding poorly designed devices to their managers, purchasing officers, and to manufacturers.

The next interface level in our progression of interfaces is the microsystem-device interface. At the microsystem level (i.e., contained organizational units such as EDs and ICUs), it is recognized that medical equipment and devices frequently do not exist in stand-alone form but are tied into and coupled with other components and accessories that collectively are intended to function as a seamless, integrated system. Providers, on the other hand, are quick to remind us that this is frequently not the case, given the amount of time they spend looking for appropriate cables, lines, connectors, and other accessories. In many ORs and ICUs, an eclectic mix of monitoring systems from different vendors interfaces with various devices, increasing the cognitive workload placed on provider personnel. Medical gas mix-ups, in which nitrogen and carbon dioxide have been mistakenly connected to the oxygen supply system, are another microsystem interface problem, as evidenced by several alerts from health safety organizations. Gas system safeguards using incompatible connectors have been overridden with adapters and other retrofitted connections. The lesson for providers here is to be mindful that the very need for an adapter is a warning signal that a connection is being sought that may not be intended by the device manufacturer and that may be incorrect and harmful.28

Yet other device-related concerns are sociotechnical in nature, and hence we refer to a sociotechnical-device interface. How well are the technical requirements for operating and maintaining the device supported by the physical and socio-organizational environment of the user? Are the facilities and workspaces where the device is used adequate? Are quality assurance procedures in place that ensure proper operation and maintenance of the device? What sort of training do providers receive in device operation before using the device with patients? Are chief operating officers and nurse managers committed to safe device use as an integral component of patient safety? As health information technology (HIT) plays an increasing role in efforts to improve patient safety and quality of care, greater scrutiny needs to be directed at discerning the optimal and less-than-optimal conditions in the sociotechnical environment for the intelligent and proper use of these devices and technologies.

The Physical Environment

The benefits of a physical work environment that is purposefully designed for the nature of the work that is performed have been well understood in other high-risk industries for a number of years. More recently, the health care profession has begun to appreciate the relationship between the physical environment (e.g., design of jobs, equipment, and physical layout) and employee performance (e.g., efficiency, reduction of error, and job satisfaction). The third tier in Figure 1 also emphasizes the importance of the physical environment in health care delivery.

There is a growing evidence base from health care architecture, interior design, and environmental and human factors engineering that supports the assertion that safety and quality of care can be designed into the physical construction of facilities. An extensive review by Ulrich and colleagues29 found more than 600 studies that demonstrated the impact of the design of the physical environment of hospitals on safety and quality outcomes for patients and staff. The design improvements identified cover a diverse range: better use of space for improved patient vigilance and reduced steps to the point of patient care; mistake proofing and forcing functions that preclude the initiation of potentially harmful actions; standardization of facility systems, equipment, and patient rooms; in-room placement of sinks for hand hygiene; single-bed rooms for reducing infections; better ventilation systems for pathogen control; improved patient handling, transport, and prevention of falls; HIT for quick and reliable access to patient information and enhanced medication safety; appropriate and adjustable lighting; noise reduction for lowering stress; simulation suites with sophisticated mannequins that enable performance mastery of critical skills; improved signage; use of affordances and natural mapping; and greater accommodation and sensitivity to the needs of families and visitors. Reiling and colleagues30 described the design and building of a new community hospital that illustrates the deployment of patient safety-driven design principles.

A basic premise of sound design is that it starts with a thorough understanding of user requirements. A focus on the behavioral and performance requirements of a building’s occupants has generally been accepted in architecture since the early 1970s.31–33 Architects have devised methods, not dissimilar to the function and task analysis techniques developed by human factors practitioners, that inventory all the activities performed by a building’s occupants and visitors. Table 2 lists just a small sample of questions that need to be asked.34, 35

Given the vast amounts of time spent on hospital units and the number of repetitive tasks performed, nurses as an occupational group are especially sensitive to building and workplace layout features that have a direct bearing on the quality and safety of care provided. When designing workplaces in clinical settings, human capabilities and limitations need to be considered with respect to distances traveled, standing and seated positions, work surfaces, the lifting of patients, visual requirements for patient monitoring, and spaces for provider communication and coordination activities. Traveling unnecessary distances to retrieve needed supplies or information wastes valuable time. Repetitive motor activity contributes to fatigue. Information needed by several people can be made easily accessible electronically, communication and coordination among providers can be maximized by suitable spatial arrangements, and clear lines of sight can be designed where they are needed for monitoring tasks.

At the time of this chapter’s writing, the U.S. hospital industry is in the midst of a major building boom expected to continue through the next decade, with an estimated $200 billion earmarked for new construction. Nursing has an opportunity to play a key role by serving on design teams that seek to gain a better understanding of the tasks performed by provider personnel. By drawing on the accumulating evidence base, hospitals can be designed to be more effective, safe, efficient, and patient-centered. Or they can be designed in a way that repeats the mistakes of the past. Either way, the physical form that hospitals take will affect the quality and safety of health care delivery for years to come.

Organizational/Social Environment

As shown in the third tier of Figure 1, the organizational/social environment represents another set of latent conditions that can lie dormant for some time yet, when combined with other pathogens (to use Reason’s metaphor10), can thwart the system’s defenses and lead to error. The role of organizational and social factors in adverse events has been poorly understood, in large part because their consequences are delayed and dormant. These are the omnipresent but difficult-to-quantify factors (organizational climate, group norms, morale, authority gradients, local practices) that often go unrecognized by individuals because they are so deeply immersed in them. Over time, however, these factors are sure to have their impact.

In her analysis of the Challenger disaster, Vaughan36 discovered a pattern of small, incremental erosions to safety and quality that over time became the norm. She referred to this organizational/social phenomenon as normalization of deviance. Disconfirming information (i.e., information that the launch mission was not going as well as it should) was minimized and brought into the realm of acceptable risk. This served to reduce any doubt or uneasy feelings about the status of the mission and preserved the original belief that NASA’s systems were essentially safe. A similar normalization of deviance seems to have happened in health care with the benign acceptance of shortages and adverse working conditions for nurses. If a hospital can get by with fewer and fewer nurses and other needed resources without the occurrence of serious adverse consequences, these unfavorable conditions may continue to get stretched, creating thinner margins of safety, until a major adverse event occurs.

Another form of organizational fallibility is the good provider fallacy.37, 38 Nurses as a group have well-deserved professional reputations as a result of their superb work ethic, commitment, and compassion. Many, no doubt, take pride in their individual competence, resourcefulness, and ability to solve problems on the run during the daily processes of care. Yet, as fine as these qualities are, there is a downside to them. In a study of hospital work process failures (e.g., missing supplies, malfunctioning equipment, incomplete/inaccurate information, unavailable personnel), Tucker and Edmondson39 found that the failures elicited work-arounds and quick fixes by nurses 93 percent of the time, and reports of the failure to someone who might be able to do something about it only 7 percent of the time. While this strategy for problem-solving satisfies the immediate patient care need, from a systems perspective it is sheer folly to focus only on the first-order problem and do nothing about the second-order problem: the contributing factors that create the first-order problem. By focusing only on first-order fixes or work-arounds and not the contributing factors, the problems simply recur on subsequent shifts as nurses repeat the cycle of trying to keep up with the crisis of the day. To change this shortsightedness, it is time for nurse managers and those who shape organizational climate to value some new qualities. Rather than simply valuing nurses who take the initiative, who roll with the punches while attempting quick fixes, and who otherwise “stay in their place,” it is time to value nurses who ask penetrating questions, who present evidence contrary to the view that things are all right, and who step out of a traditionally compliant role and help solve the problem-behind-the-problem. 
Given the vast clinical expertise and know-how of nurses, it is a great loss when organizational and social norms in the clinical work setting create a culture of low expectations and inhibit those who can so clearly help the organization learn to deliver safer, higher quality, and more patient-centered care.

Management

Conditions of poor planning, indecision, or omission, associated with managers and those in decisionmaking positions, are termed latent because they occur further upstream in Figure 1 (tier four), far away from the sharp-end activities of nurses and other providers. Decisions are frequently made in a loose, diffuse, somewhat disorderly fashion. Because the consequences of decisions accrue gradually, interact with other variables, and are difficult to isolate and attribute, those who make organizational policy, shape organizational culture, and implement managerial decisions are rarely held accountable for the consequences of their actions. Yet managerial dictates and organizational practices regarding staffing, communication, workload, patient scheduling, accessibility of personnel, insertion of new technology, and quality assurance procedures are sure to have their impact. As noted earlier, providers are actually the last line of defense, for it is the providers who ultimately must cope with the shortcomings of everyone else who has played a role in the design of the greater sociotechnical system. For example, the absence of a serious commitment to higher quality and safe care at the management level is a latent condition that may become apparent in terms of adverse consequences only when this “error of judgment” aligns itself with other system variables such as overworked personnel, excessive interruptions, poorly designed equipment interfaces, a culture of low expectations, and rapid-paced production schedules for treating patients.

Compared to providers, managers and decisionmakers are much better positioned to actually address the problems-behind-the-problem and be mindful of the interdependencies of care. Managers and decisionmakers have the opportunity to work across organizational units of care and address the discontinuities. With perhaps a few exceptions, there is very little evidence that managers and leaders actually spend much time attending to the complex interdependencies of care and areas of vulnerability in their institutions. While they may not have the same clinical know-how as sharp-end personnel, they certainly have the corporate authority to involve those with clinical expertise in needed change efforts. Thus, a new role for health care leaders and managers is envisioned, placing a high value on understanding system complexity and focusing on the interdependencies, not just the components.38 In this new role, leaders recognize that the superb clinical knowledge and dedication of providers are no match for the toll that flawed and poorly performing interdependent systems of care can take. In brief, they aim to do something about the misalignments.

The External Environment

Lest it seem that the authors are being a bit harsh on management, it needs to be recognized that there are external forces exerting their influence at this level. From a systems perspective, one must not simply repeat the blame game and lay all the responsibility for health care delivery problems at the feet of management. Health care is an open system, and, as shown in Figure 1, each system level subsumes the levels below it and is in turn subsumed by the levels above it. Subsuming the management level and the more distal downstream levels is the external environment, which perhaps is best portrayed as a shifting mosaic of economic pressures, political climates and policies, scientific and technological advancements, and changing demographics. For those who toil at the sharp end, these diffuse, broad-based, and shifting forces may seem less relevant because of their more remote or indirect impact. While this is understandable, the impact of these forces is undeniable. The external environment influences patient safety and quality of care by shaping the context in which care is provided. A salient characteristic of our dynamic 21st-century society is that these external forces are stronger and change more frequently than ever before.7 For providers and health care decisionmakers to stay ahead of these forces (rather than getting rolled over by them) and gain more proactive leverage to help shape the ensuing changes, it is first necessary to gain a better understanding of the external forces that are operating.

Not only is the scientific foundation of nursing and medicine expanding significantly (e.g., consider advances in genomics, neuroscience, immunology, and the epidemiology of disease), there is a corresponding need to master different procedures associated with new drug armamentariums, new imaging technologies, and new minimally invasive surgical interventions.40 The groundwork is currently being laid for pay-for-performance to become a reality in the near future. Safety, efficiency, and high-quality care will serve as a basis for medical reimbursement, not just services rendered, as is currently the case. Two demographic trends are converging, causing serious alarm. Nursing, as a profession, continues to experience shortages and discontent just as an aging baby boom population with a plethora of chronic and acute care needs starts to occupy a wide range of care settings. Medical practice has been steadily shifting from inpatient to outpatient settings. Economic incentives to move patients out of hospitals as soon as possible continue, and, as noted earlier, there is a concurrent migration of sophisticated medical devices into the home despite fears that the home care environment may not be suitable for safe and effective medical device use. With continued cost-containment concerns and pressures on clinicians to be as productive as possible, the clinical setting becomes a less ideal place to acquire clinical skills from senior staff. Simulation techniques are currently receiving active investigation and may provide an alternative means of acquiring and maintaining clinical skills. At the same time, with a growing proportion of the population composed of minorities, greater sensitivity and tailored approaches directed toward those less well served by the health system will be needed.

Unlike other sectors of the economy, health care remained untouched for too long by advances in information technology (except, perhaps, for billing purposes). That is no longer the case, given the recent implementation of electronic health records, computerized physician order entry systems, barcoding systems, and other technologies by early adopters. However, the lofty expectations that accompany new technology are often quickly dampened by unintended consequences.41, 42 One of the early lessons learned is that successful implementation involves more than just technical considerations: the nature of clinical work, the design of well-conceived interfaces, workflow considerations, user acceptance and adoption issues, training, and other organizational support requirements all need to be taken into account. Still another external development that will likely have an impact on clinical practice in the years to come is the passage of the Patient Safety and Quality Improvement Act of 2005. It provides confidentiality protections and encourages providers to contract with patient safety organizations (PSOs) for the purpose of collecting and analyzing data on patient safety events so that information can be fed back to providers to help reduce harm to patients. With the confidentiality protections mandated by the act, providers should be able to report patient safety events freely without fear of reprisal or litigation. Finally, given the availability of numerous medical Web sites and a national press network sensitized to instances of substandard clinical care and medical error, today’s patients are better informed and a bit less trusting with respect to their encounters with the health system.

What Can Nurses Do?

Considering all the system factors (and we have only identified some of them), a normal reaction probably is to feel a bit overwhelmed by the demanding and complex clinical environment in which nurses find themselves. Given the hierarchical and complex nature of the system factors identified and the unanticipated ways they can interact, a reasonable question is, “What can nurses do?” The answer, in part, comes from learning to manage the unexpected43, a quality of high-reliability organizations (HROs) that many health care organizations are currently learning to adopt. In brief, HROs are those organizations that have sustained very impressive safety records while operating in very complex and unkind environments (e.g., aircraft carriers, nuclear power, firefighting crews), where the risk of injury to people and damage to expensive equipment or the environment is high. A key characteristic on the part of workers in HROs is mindfulness: a set of cognitive processes that allows individuals to be highly attuned to the many ways things can go wrong in unkind environments and to ways to recover from them. Workers in HROs are qualitatively different and continuously mindful of different things compared to workers in less reliable organizations. Table 3 describes the five mindfulness processes that define the core components of HROs and the implications for nursing. For a fuller account of HROs, interested readers are encouraged to access the original source.43

It should be noted that not everyone in health care has been receptive to comparisons between health care delivery and the activities that take place in other high-risk industries such as aircraft carrier operations or nuclear power. Health care is not aviation; it is more complex and qualitatively different. While all of this may be true, it probably also is true that health care is the most poorly managed of all the high-risk industries and very late in coming to recognize the importance of the system factors that underlie adverse events. The one thing that the other high-risk industries clearly have in common with health care is the human component. Sailors who work the decks of aircraft carriers have the same physiologies as those who work the hospital floor. They get fatigued from excessive hours of operation in the same way as those who occupy the nurses’ station. When the technology and equipment they use are poorly designed and confusing to use, they get frustrated and make mistakes similar to those made in health care by people who have to use poorly designed medical devices. When the pace of operations picks up and they are bombarded with interruptions, short-term memory fails them in exactly the same way that it fails those who work in hectic EDs and ICUs. They respond to variations in the physical environment (e.g., lighting, noise, workplace layout) and to social/organizational pressures (e.g., group norms, culture, authority gradients) in a very similar fashion to those in health care who are exposed to the same set of factors. While the nature of the work may be dramatically different, the types of system factors that influence human performance are indeed very similar. 
The take-home message of all this is that the human factors studies that have been conducted in the other high-risk industries are very relevant to health care, and nursing in particular, as we continue to learn to improve the skills, processes, and system alignments that are needed for higher quality and safer care.

Conclusion

The complex and demanding clinical environment of nurses can be made somewhat more understandable, and care somewhat easier to deliver, by accounting for a wide range of human factors concerns that directly and indirectly affect human performance. Human factors is the application of scientific knowledge about human strengths and limitations to the design of systems in the work environment to ensure safe and satisfying performance. A human factors framework such as that portrayed in Figure 1 helps us become aware of the salient components, and the relationships among them, that shape and influence the quality of care provided to patients. Human error is a somewhat loaded term. Rather than falling into the trap of uncritically focusing on human error and searching for individuals to blame, a systems approach attempts to identify the factors that contribute to substandard performance and find ways to better detect, recover from, or preclude problems that could result in harm to patients. Starting with the individual characteristics of providers such as their knowledge, skills, and sensory/physical capabilities, we examined a hierarchy of system factors, including the nature of the work performed, the physical environment, human-system interfaces, the organizational/social environment, management, and external factors. In our current fragmented health care system, where no single individual or entity is in charge, these multiple factors seem to be continuously misaligned and interact in a manner that leads to substandard care. These are the proverbial accidents in the system waiting to happen. Nurses serve in a critical role at the point of patient care; they are in an excellent position not only to identify the problems, but also to help identify the problems-behind-the-problems. Nurses can actively practice the tenets of high-reliability organizations. It is recognized, of course, that nursing cannot address the system problems all on its own. 
Everyone who has a potential impact on patient care, no matter how remote (e.g., device manufacturers, administrators, nurse managers), needs to be mindful of the interdependent system factors that they play a role in shaping. Without a clear and strong nursing voice and an organizational climate that is conducive to candidly addressing system problems, efforts to improve patient safety and quality will fall short of their potential.

References

- 1.

- Kohn LT, Corrigan JM, Donaldson MS, editors. To err is human: building a safer health system. A report of the Committee on Quality of Health Care in America, Institute of Medicine. Washington, DC: National Academy Press; 2000. [PubMed: 25077248]

- 2.

- Sanders M, McCormick E. Human factors in engineering and design. New York: McGraw-Hill; 1993.

- 3.

- Chapanis A, Garner W, Morgan C. Applied experimental psychology: human factors in engineering design. New York: Wiley; 1985.

- 4.

- Henriksen K, Kaye R, Morisseau D. Industrial ergonomic factors in the radiation oncology therapy environment. In: Nielsen R, Jorgensen K, editors. Advances in industrial ergonomics and safety V. Washington, DC: Taylor and Francis; 1993. pp. 267–74.

- 5.

- Vincent C, Adams S, Stanhope N. A framework for the analysis of risk and safety in medicine. BMJ. 1998;316:1154–7. [PMC free article: PMC1112945] [PubMed: 9552960]

- 6.

- Carayon P, Smith M. Work organization and ergonomics. Appl Ergon. 2000;31(6):649–61. [PubMed: 11132049]

- 7.

- Vicente K. The human factor. New York: Routledge; 2004.

- 8.

- Schyve P. Systems thinking and patient safety. In: Henriksen K, Battles J, Marks E, Lewin D, editors. Advances in patient safety: from research to implementation. Vol. 2. Rockville, MD: Agency for Healthcare Research and Quality; 2005. pp. 1–4.

- 9.

- Fischhoff B. Hindsight ≠ foresight: the effect of outcome knowledge on judgment under uncertainty. J Exp Psychol. 1975;1:288–99. [PMC free article: PMC1743746] [PubMed: 12897366]

- 10.

- Reason J. Human error. Cambridge: Cambridge University Press; 1990.

- 11.

- Henriksen K, Kaplan H. Hindsight bias, outcome knowledge, and adaptive learning. Qual Saf Health Care. 2003;12(Suppl II):ii46–ii50. [PMC free article: PMC1765779] [PubMed: 14645895]

- 12.

- Dekker S. The field guide to human error investigations. Burlington, VT: Ashgate Publishing Ltd; 2002.

- 13.

- Jones E, Nisbett R. The actor and the observer: divergent perceptions on the causes of behavior. New York: General Learning Press; 1971.

- 14.

- Rasmussen J. The definition of human error and a taxonomy for technical system design. In: Rasmussen J, Duncan K, Leplat J, editors. New technology and human error. Indianapolis, IN: John Wiley and Sons; 1987. pp. 23–30.

- 15.

- Perrow C. Normal accidents: living with high-risk technologies. New York: Basic Books; 1984.

- 16.

- Reason J, Carthey J, deLeval M. Diagnosing “vulnerable system syndrome”: an essential prerequisite to effective risk management. Qual Health Care. 2001;10(Suppl II):ii21–ii25. [PMC free article: PMC1765747] [PubMed: 11700375]

- 17.

- Reason J. Managing the risks of organizational accidents. Burlington, VT: Ashgate; 1997.

- 18.

- Cook R, Woods D. Operating at the sharp end: the complexity of human error. In: Bogner M, editor. Human error in medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 1994. pp. 255–310.

- 19.

- Henriksen K, Kaye R, Jones R, et al. US Nuclear Regulatory Commission NUREG/CR-6277. Vol. 5. Washington, DC: Taylor and Francis; 1995. Human factors evaluation of teletherapy: literature review.

- 20.

- Hendrickson F. The four P’s of human error in treatment delivery. Int J Radiat Oncol Biol Phys. 1982;8:311–2. [PubMed: 711561]

- 21.

- Swann-D’Emilia B, Chu J, Daywalt J. Misadministration of prescribed radiation dose. Med Dosim. 1990;15:185–91. [PubMed: 2073331]

- 22.

- Cannon-Bowers J, Salas E. Making decisions under stress: implications for individual and team training. Washington, DC: American Psychological Association; 1998.

- 23.

- Alluisi E, Fleishman E, editors. Human performance and productivity. Vol. 3: Stress and performance effectiveness. Hillsdale, NJ: Lawrence Erlbaum Associates; 1982.

- 24.

- Wickens C, Hollands J. Engineering psychology and human performance. 3rd ed. Upper Saddle River, NJ: Prentice Hall; 2000.

- 25.

- Salvendy G, editor. Handbook of human factors. New York: John Wiley and Sons, Inc; 1987.

- 26.

- Henriksen K. Macroergonomic interdependence in patient safety research. Paper presented at XVth Triennial Congress of the International Ergonomics Association and the 7th Joint Conference of Ergonomics Society of Korea/Japan Ergonomics Society; August 2003; Seoul, Korea.

- 27.

- Henriksen K. Human factors and patient safety: continuing challenges. In: Carayon P, editor. The handbook of human factors and ergonomics in health care and patient safety. Mahwah, NJ: Lawrence Erlbaum Associates Inc; 2006. pp. 21–37.

- 28.

- ECRI. Preventing misconnections of lines and cables. Health Devices. 2006;35(3):81–95. [PubMed: 16610452]

- 29.

- Ulrich R, Zimring C, Quan X, et al. The role of the physical environment in the hospital of the 21st century: a once-in-a-lifetime opportunity. Report to the Robert Wood Johnson Foundation. Princeton, NJ: Robert Wood Johnson Foundation; 2004.

- 30.

- Reiling J, Knutzen B, Wallen T, et al. Enhancing the traditional hospital design process: a focus on patient safety. Jt Comm J Qual Saf. 2004;30(3):115–24. [PubMed: 15032068]

- 31.

- Wright JR. Performance criteria in buildings. Sci Am. 1971;224(3):17–25.

- 32.

- Brill M. Evaluating buildings on a performance basis. In: Lang J, editor. Designing for human behavior: architecture and the behavioral sciences (Community Development Series). Stroudsburg, PA: Dowden, Hutchinson and Ross; 1974.

- 33.

- Cronberg T. Performance requirements for buildings: a study based on user activities. Swedish Council for Building Research D3; 1975.

- 34.

- Harrigan J. Architecture and interior design. In: Salvendy G, editor. Handbook of human factors. New York: John Wiley and Sons; 1987. pp. 742–64.

- 35.

- Harrigan J, Harrigan J. Human factors program for architects, interior designers and clients. San Luis Obispo, CA: Blake Printery; 1979.

- 36.

- Vaughan D. The Challenger launch decision: risky technology, culture, and deviance at NASA. Chicago, IL: The University of Chicago Press; 1996.

- 37.

- Wears R. Oral remarks at the SEIPS short course on human factors engineering and patient safety, Part I. Madison, WI: University of Wisconsin-Madison; 2004.

- 38.

- Henriksen K, Dayton E. Organizational silence and hidden threats to patient safety. Health Serv Res. 2006;41(4p2):1539–54. [PMC free article: PMC1955340] [PubMed: 16898978]

- 39.

- Tucker A, Edmondson A. Why hospitals don’t learn from failures: organizational and psychological dynamics that inhibit system change. Calif Manage Rev. 2003;45:55–72.

- 40.

- Task Force on Academic Health Centers. Training tomorrow’s doctors: the medical education mission of academic health centers. New York: The Commonwealth Fund; 2002.

- 41.

- Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293:1197–203. [PubMed: 15755942]

- 42.

- Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA. 2005;293:1261–3. [PubMed: 15755949]

- 43.

- Weick K, Sutcliffe K. Managing the unexpected: assuring high performance in an age of complexity. San Francisco: Jossey-Bass; 2001.

Figures

Figure 1

Contributing Factors to Adverse Events in Health Care

Figure 2

The “Swiss Cheese” Model of Accident Causation

Source: Reason J, Carthey J, deLeval M. Diagnosing “vulnerable system syndrome”: An essential prerequisite to effective risk management. Qual Health Care, 2001; 10(Suppl. II):ii21–ii25. Reprinted with permission of the BMJ Publishing Group.

Tables

Table 1

Sociotechnical System Models

| Authors | Elements of Model |
|---|---|
| Henriksen, Kaye, Morisseau 19934 |  |
| Vincent 19985 |  |
| Carayon, Smith 20006 |  |

Table 2

Determining Activities Performed by Building Occupants and Visitors


Table 3

A State of Mindfulness for Nurses

| Core process | Explanation/Implication for Nursing |
|---|---|
| Preoccupation with failure | Adverse events are rare in HROs, yet these organizations focus incessantly on ways the system can fail them. Rather than letting success breed complacency, they worry about success and know that adverse events will indeed occur. They treat close calls as a sign of danger lurking in the system. Hence, it is a good thing when nurses are preoccupied with the many ways things can go wrong and when they share that “inner voice of concern” with others. |
| Reluctance to simplify interpretations | When things go wrong, less reliable organizations find convenient ways to circumscribe and limit the scope of the problem. They simplify and do not spend much energy on investigating all the contributing factors. Conversely, HROs resist simplified interpretations, do not accept conventional explanations that are readily available, and seek out information that can disconfirm hunches and popular stereotypes. Nurses who develop good interpersonal, teamwork, and critical-thinking skills will enhance their organization’s ability to accept disruptive information that disconfirms preconceived ideas. |
| Sensitivity to operations | Workers in HROs do an excellent job of maintaining a big picture of current and projected operations. Jet fighter pilots call it situational awareness; surface Navy personnel call it maintaining the bubble. By integrating information about operations and the actions of others into a coherent picture, they are able to stay ahead of the action and can respond appropriately to minor deviations before they result in major threats to safety and quality. Nurses also demonstrate excellent sensitivity to operations when they process information regarding clinical procedures beyond their own jobs and stay ahead of the action rather than trying to catch up to it. |
| Commitment to resilience | Given that errors are always going to occur, HROs commit equal resources to being mindful about errors that have already occurred and to correcting them before they worsen. Here the idea is to reduce or mitigate the adverse consequences of untoward events. Nursing already shows resilience by putting supplies and recovery equipment in places that can be quickly accessed when patient conditions go awry. Since foresight always lags hindsight, nursing resilience can be honed by creating simulations of care processes that start to unravel (e.g., failure to rescue). |
| Deference to expertise | In managing the unexpected, HROs allow decisions to migrate to those with the expertise to make them. Decisions that have to be made quickly are made by knowledgeable front-line personnel who are closest to the problem. Less reliable organizations show misplaced deference to authority figures. While nurses, no doubt, can cite many examples of misplaced deference to physicians, there are instances where physicians have assumed that nurses have the authority to make decisions and act, resulting in a diffusion of responsibility. When it comes to decisions that need to be made quickly, implicit assumptions need to be made explicit; rules of engagement need to be clearly established; and deference must be given to those with the expertise, resources, and availability to help the patient. |